LLMs

21 min read

—

Feb 25, 2025

Content Creator

Do you know what's wrong with search engines powered by artificial intelligence?

We're asking them much, much more complex and nuanced questions than we ever asked Google. But the answers ChatGPT serves you after doing a quick web search are still built from the same shallow pool of keyword-indexed web pages that traditional search engines would give you.

The result?

AI search engines often end up doing something dangerously creative—they synthesize plausible-sounding responses by mixing and matching fragments of information, creating what appears to be a coherent answer but is actually a patchwork of misquoted, out-of-context, or entirely fabricated information. For example, you could ask ChatGPT about video games that are currently on sale, and it might mix up full games with DLCs or expansions without mentioning that you'd need the $60 base game first.

And yet, despite these glaring inaccuracies, AI web browsing has become indispensable. The productivity gains are simply too significant to ignore. So the real question becomes: how do we make the most of these powerful but imperfect tools?

In this article, we'll explore:

What's fundamentally wrong with AI web browsing

How to improve accuracy and get better results

Which tools to use in specific situations

But first, let's address the elephant in the room:

Are AI search engines “broken” by design?

AI search engines like Perplexity or ChatGPT are powered by Large Language Models (LLM) which are a type of generative AI. These models generate content based on patterns in their training data, which can lead to the creation of plausible but false or unverified information. Also, when seeking unique insights or original opinions on specialized topics, AI models often rely on prevalent data from the internet, which tends to reflect general consensus rather than unconventional perspectives.

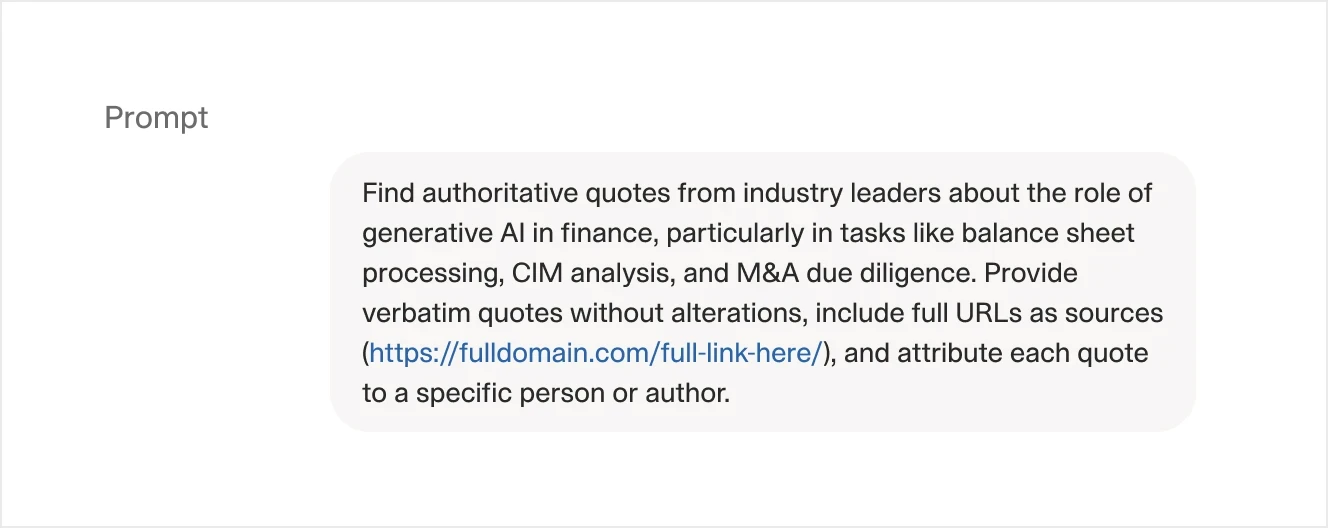

Let’s take a look at another example of a query that we’ll use with ChatGPT Web Search functionality.

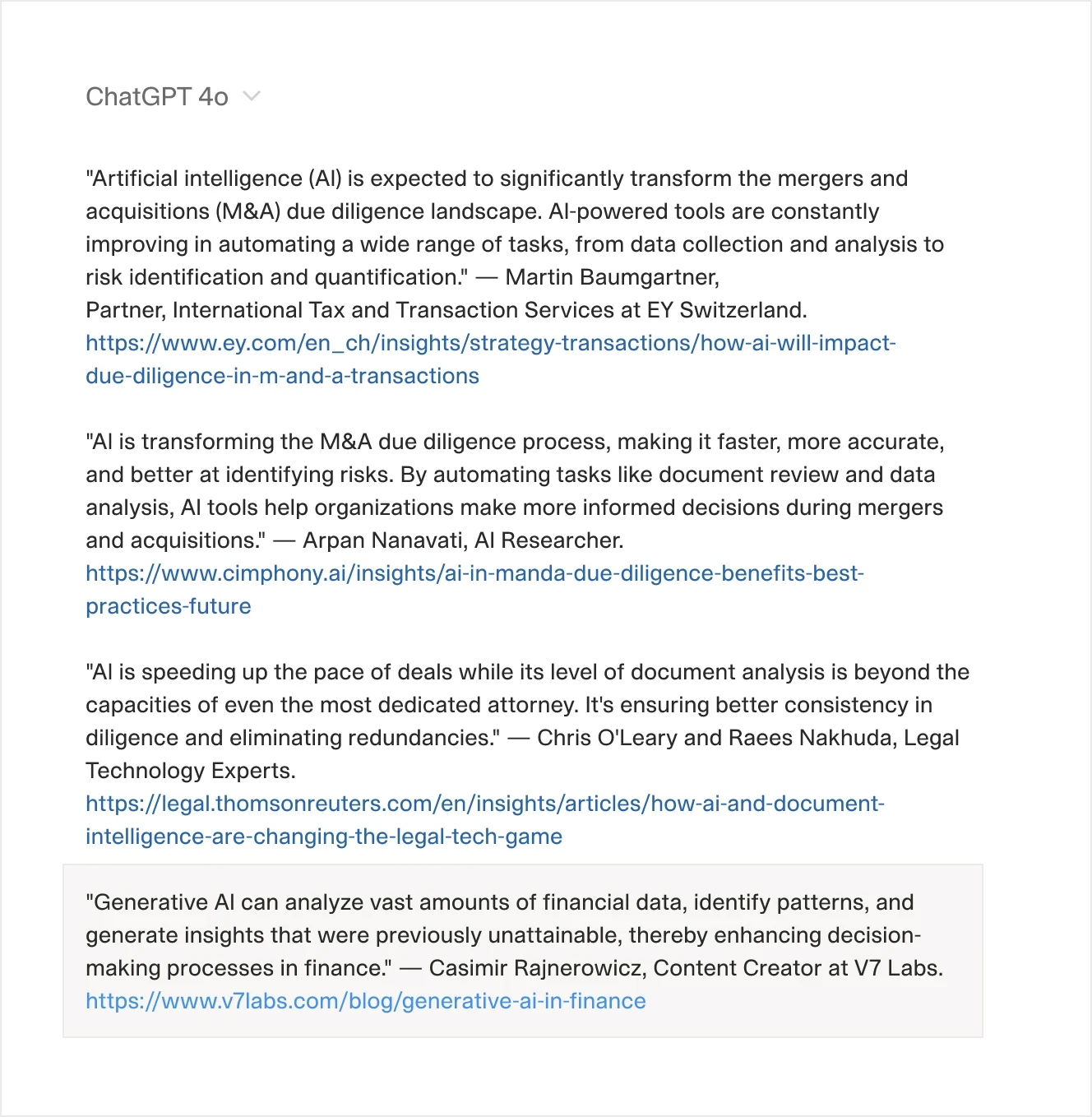

Here is the output:

Take a look at the last result—it's from one of my articles.

I would never use such a formal phrase as "thereby enhancing," yet AI confidently misquotes me and attributes it to me. You can visit the article on generative AI in finance to see that the quote attributed to me is nowhere to be found.

To be fair, some quotes were accurate. But it's this mix of real and fabricated information that makes AI search engines so treacherous.

Look closer at those quotes and you'll notice something interesting too—most are just opening statements from articles, the kind of high-level declarations that float to the top of search results. AI search engines can't magically surface minority viewpoints or specialized knowledge buried deep in lengthy discussions. In a way, AI doesn't know what it doesn't know, and even with web browsing capabilities, it struggles to piece together the information it's missing. Sure, getting three generic quotes and one fabricated one might seem like a decent batting average, but it exposes a deeper problem: these tools are just scratching the surface of available knowledge, then filling in the gaps with plausible-sounding fabrications.

But, what exactly happened here? Why are some quotes wrong?

The answer lies in how AI processes information and what happens under the hood.

AI search does not function like a traditional database lookup.

AI search engines rely on a combination of traditional keyword-based retrieval and LLMs to process, interpret, and generate responses. The retrieval process itself typically operates on keywords, ranking documents based on relevance scores. However, when an LLM is involved in processing search results, it relies on high-dimensional embeddings—numerical representations that capture semantic meaning rather than exact wording.

This means that while the search engine itself retrieves documents based on keyword matches and ranking algorithms, the LLM then processes this information and “thinks about it” by implicitly converting it into embeddings, which emphasize general context and meaning rather than preserving every precise detail or word-level accuracy. This step allows the model to summarize, rephrase, or extract relevant insights from the retrieved documents.

Because LLMs operate probabilistically, each processing step—whether retrieving, filtering, summarizing, or refining results—introduces a degree of abstraction. When multiple iterations occur, small deviations in meaning can accumulate. This is particularly noticeable in workflows where the LLM repeatedly refines responses. This approach can lead to inaccuracies, such as misquoting or blending context from multiple sources, because the process prioritizes general understanding over precise recall of specific words or facts.

So, what does it mean for us?

Well, you can think of AI search engines, or rather LLMs in general, like storytellers who remember the gist of every book but not every single word. AI turns pages into a "big idea" sketch instead of keeping a perfect copy of each sentence. This means the engine can tell you what a page is generally about, but sometimes it might mix up details or quote things slightly off, because it’s focusing on the overall message rather than every exact word.

There is a second problem too.

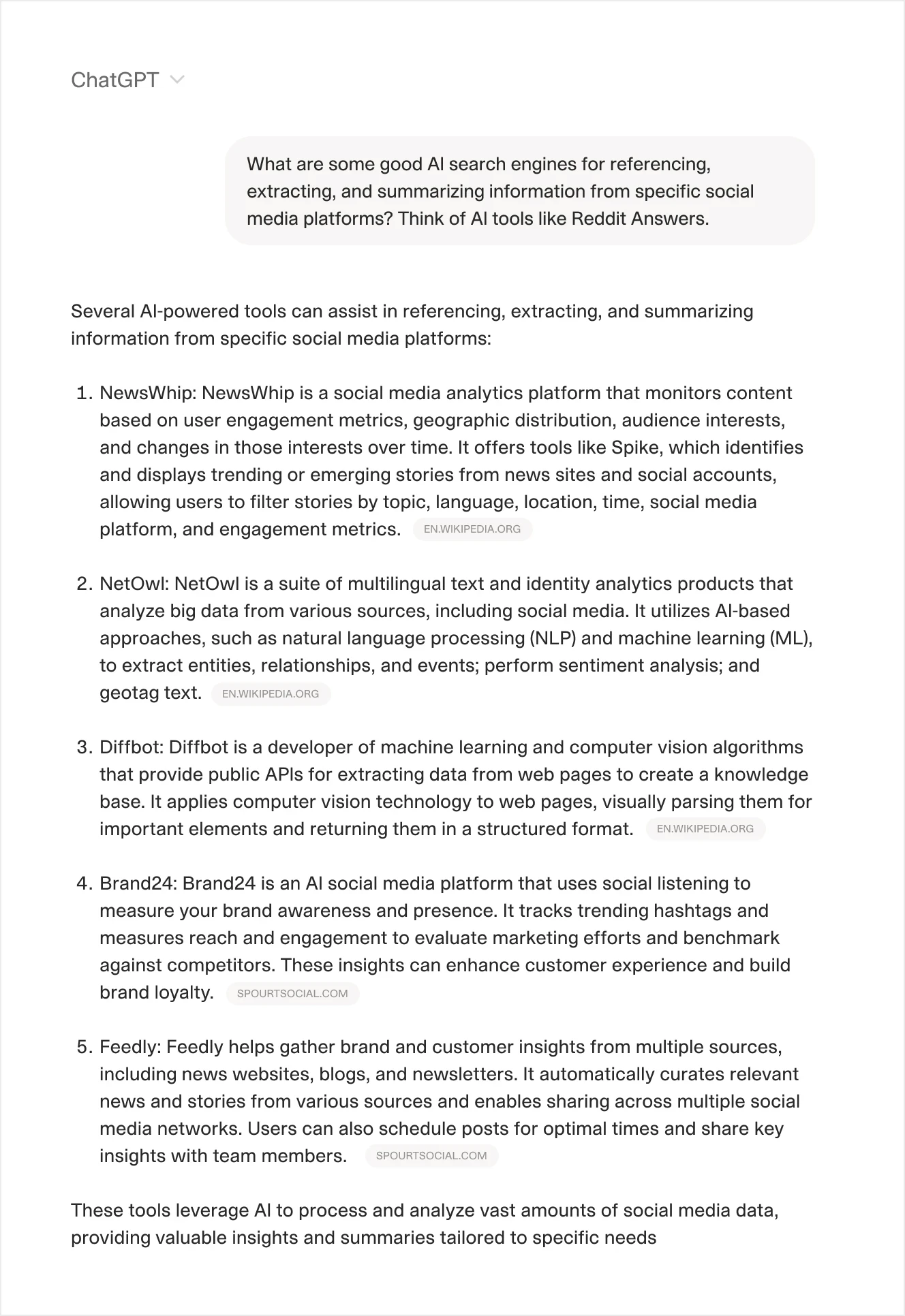

Let’s ask ChatGPT about "AI search engines for social media" and watch what happens:

Instead of understanding that we're looking for tools that surface crowdsourced insights (like Reddit Answers or Grok AI), it does a surface-level search and finds whatever ranks highest for "AI tools" + "social media." The result? You get a list of enterprise analytics platforms and engagement monitoring tools—because that's what dominates SEO-optimized search results, even though it's completely missing the point of our query.

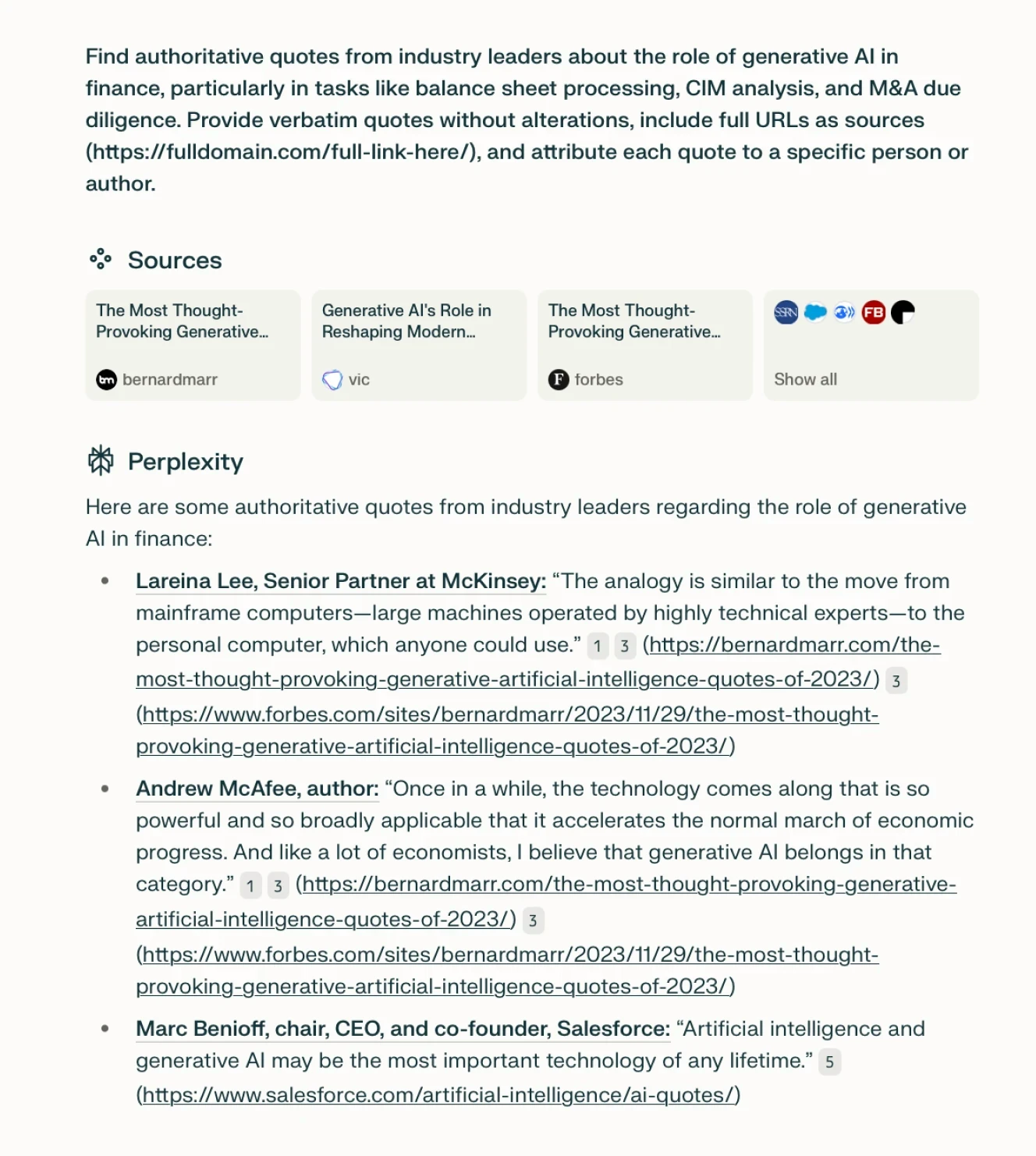

Here is another example, this time of Perplexity AI finding “quotes” but ignoring the context about finance:

The list goes on and on. Quoting numbers or statistics gets even worse.

These examples all point to the same underlying problem: AI search engines are trying to be too clever without being truly intelligent. They're taking our complex questions, running basic keyword searches, and then using their language capabilities to mask the shallowness of their research. It's like having a smooth-talking assistant who's great at presenting but terrible at actual research.

Interestingly, according to a recent study, higher confidence in AI is negatively correlated with critical thinking effort—users trust AI more, think less, and accept outputs without scrutiny.

So what can we do about it? The solution isn't to abandon AI search—it's to get better at controlling how these tools work. Let's look at how to fix these fundamental flaws and make AI searches actually trustworthy.

How to fix the problem and make AI searches more accurate

Here's the thing about current AI search engines—while they're "agentic" in theory, their actual process is surprisingly basic. Behind the scenes, they're just running through a simple playbook: write some search prompts, run searches iteratively, visit a few pages, and cobble together an answer from whatever they find. It's like having a research assistant who only skims headlines and first paragraphs before declaring "I found it!"

This approach has four major flaws that make it unreliable:

No search planning or good pre-search reasoning

Lack of control over search depth

Superficial reading and probability-based data extraction

No transparency and difficult verification

First, these engines don't think enough before they search. They jump straight into broad queries without planning a strategic research approach. Imagine trying to research a complex topic by typing random related words into Google—that's essentially what they're doing.

Second, you have no control over search depth. Want to make sure the engine checks at least 20 sources before drawing conclusions? Too bad. It'll stop as soon as it thinks it has enough to give you a plausible answer, whether that's after checking two sources or twenty.

Third, these engines are lazy readers. They'll grab the first relevant-looking paragraph they see and move on, potentially missing crucial context or more insightful information further down the page. It's like citing a book after only reading its introduction.

Finally, there's the verification problem. When an AI search engine gives you an answer, you have to manually check every source—and good luck with that when the citations are vague or, worse, completely fabricated.

The solution? We need tools that give us more control over the search process. LLM orchestration platforms with web browsing capabilities, like V7 Go, let you create custom workflows for different types of queries. Want to verify sources before synthesis? Need to ensure a minimum research depth? These tools let you control and monitor each step of the process, from initial query planning to final output generation.

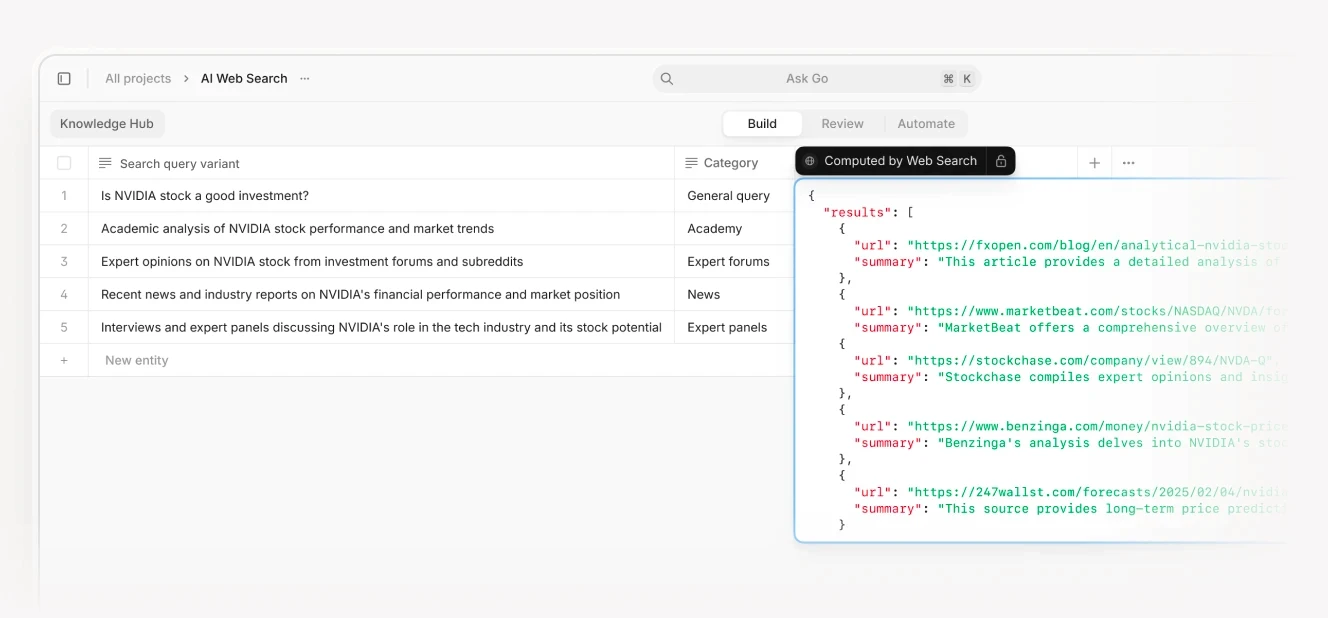

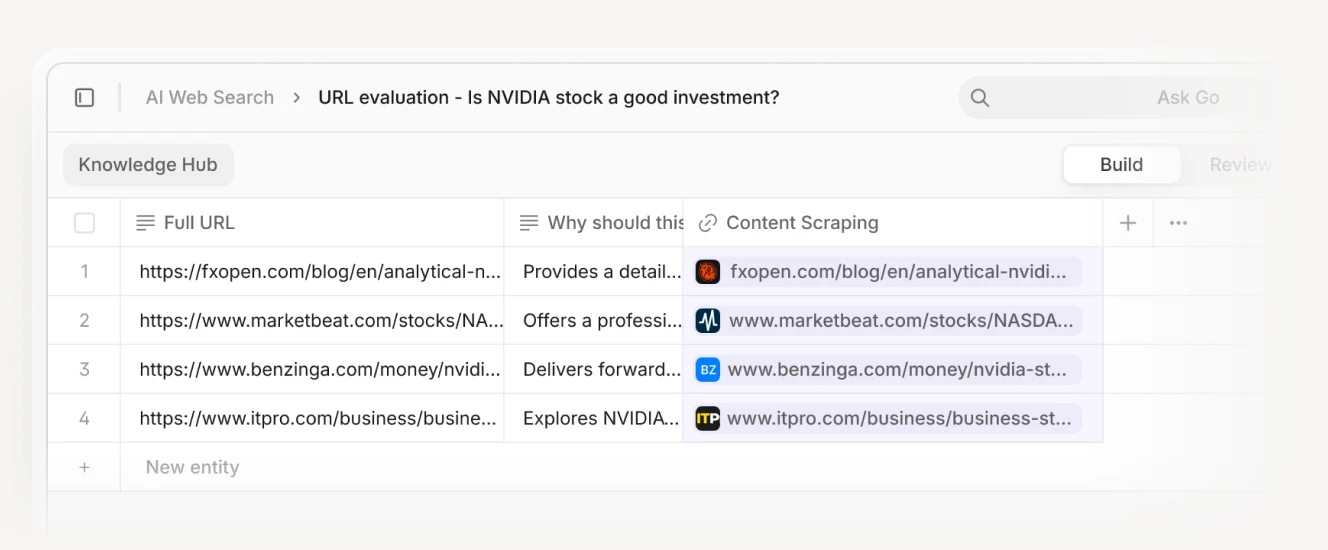

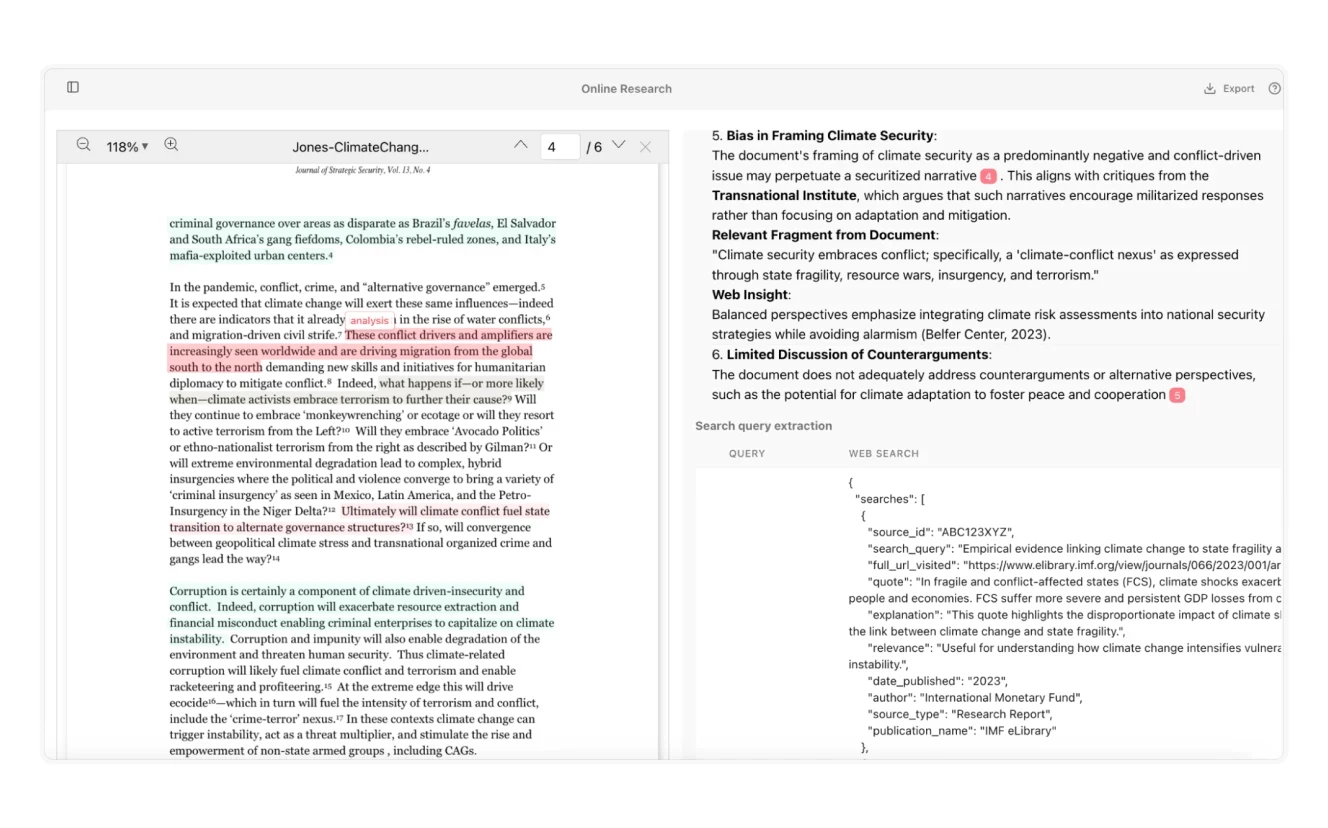

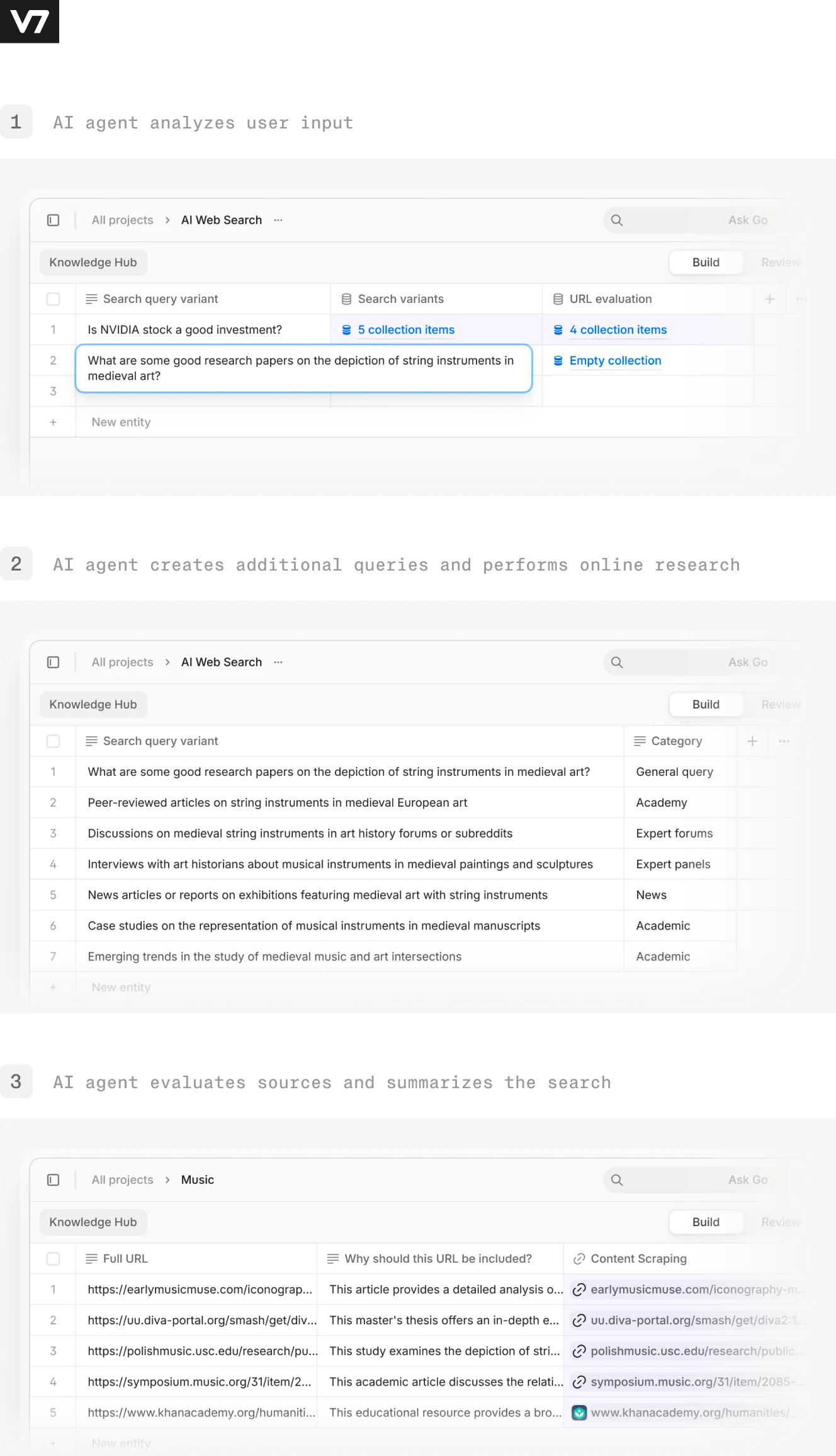

Here is a high level overview of what an AI search agent could look like:

Step 1: User input

Step 2: AI analyzes the problem and generates X separate search queries

Step 3: Multiple web search operations + full content scraping for selected URLs

Step 4: Final analysis and summarization by AI that can access all collected information

The final output is based on all information collected during the preceding steps.

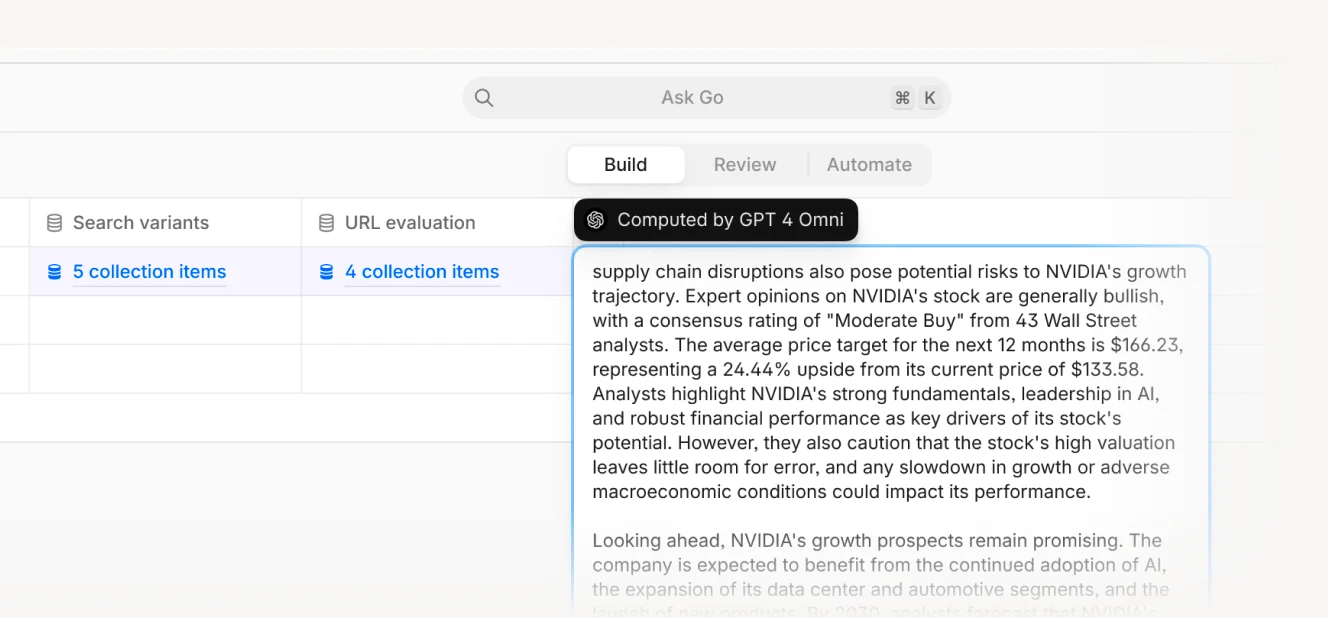

This method is just one of the possible ways to use AI web search functionalities in a more efficient and trustworthy way. The whole workflow may require some additional prompt engineering, but if you are setting it up with V7 Go you can then use it as an AI agent that is acessible inside a chat interface.

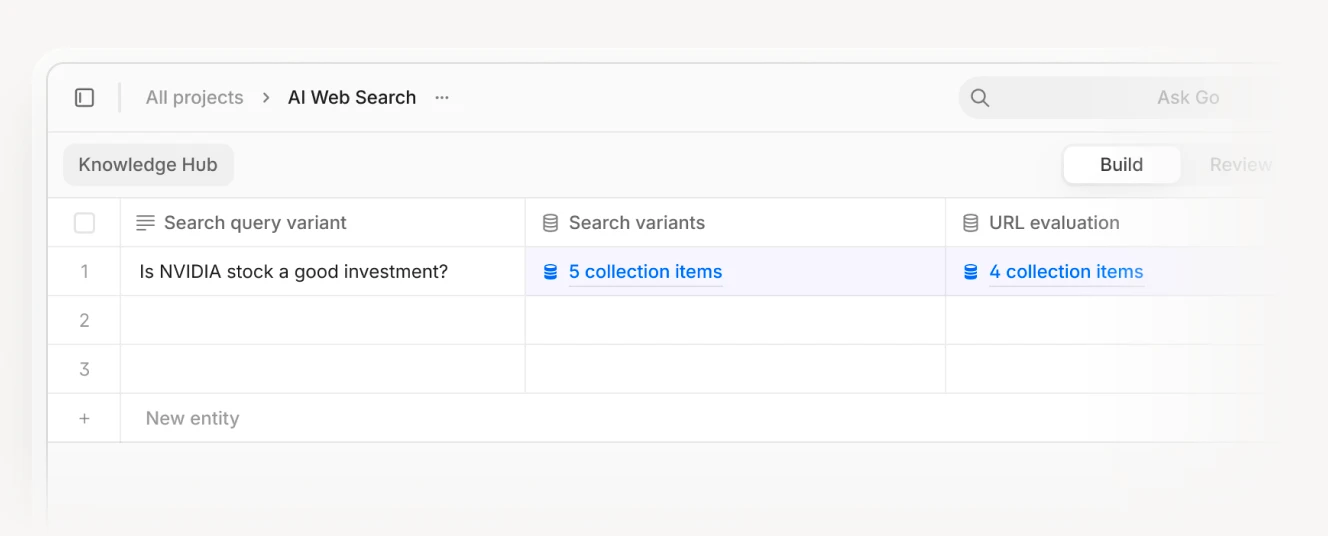

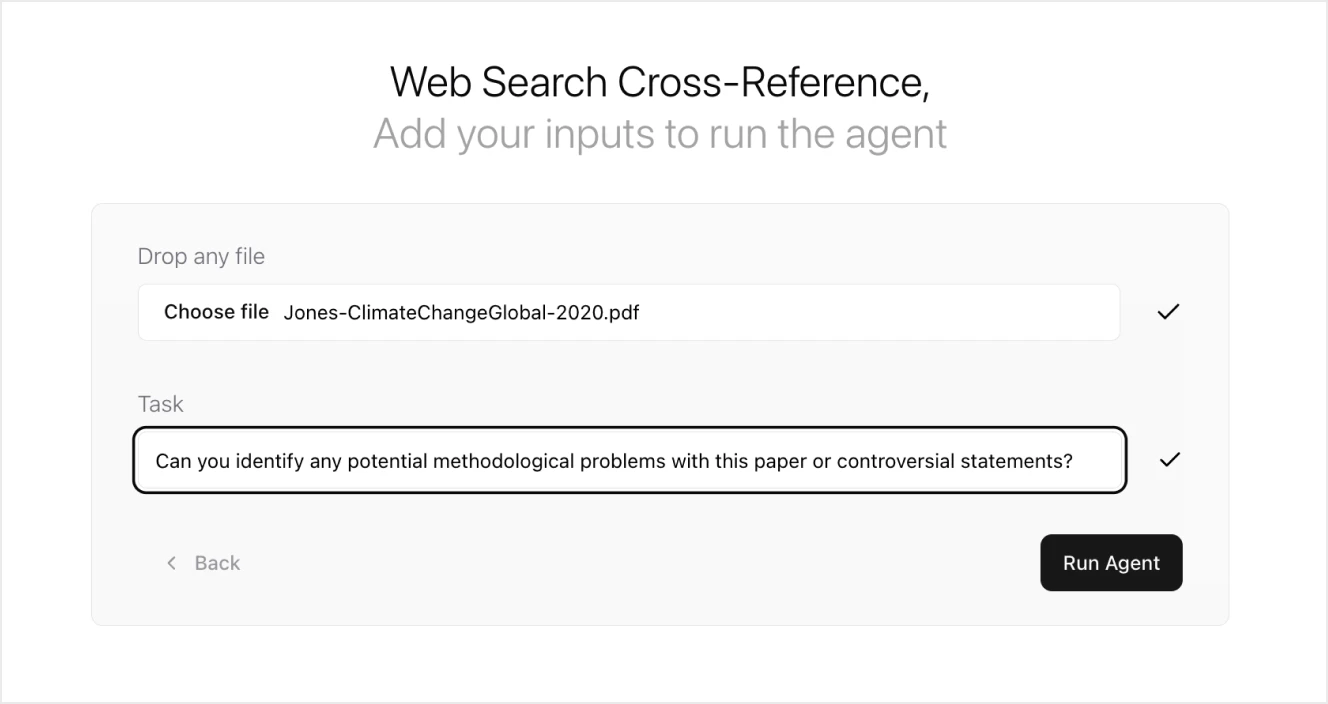

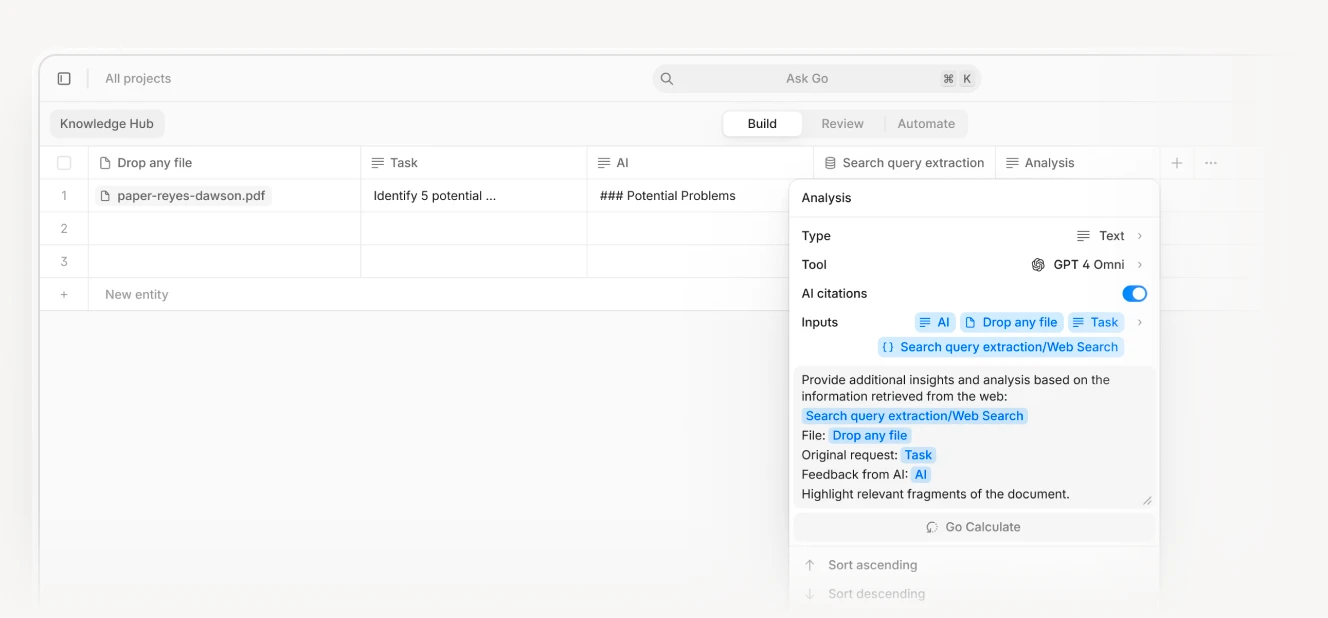

Here is an example of a different AI agent created with V7 Go for automated cross-referencing of documents with online sources.

The final output in this case is a result of a two-step analysis, one happening before using web browsing (to identify things that should be researched online in the first place) and the second based on online sources. Both AIs highlighted relevant sections using different colors. You can also explore the JSON version of the information that is collected online.

What happens behind the scenes is very similar to the previous workflow:

The AI identifies key areas of interest that require additional online research and highlights them in the document.

Then the AI agent decides what the long tail search queries should be, performs a series of online searches and extracts data from the web.

The final step is coming back to the document with the new information found online.

These agents can be configured to perform additional tasks too. This can include creating feedback loops or AI-assisted QA for fact-checking. In some workflows, different AI models can do the same tasks in parallel, in a kind of blind test way, to verify if they achieve the same or different results.

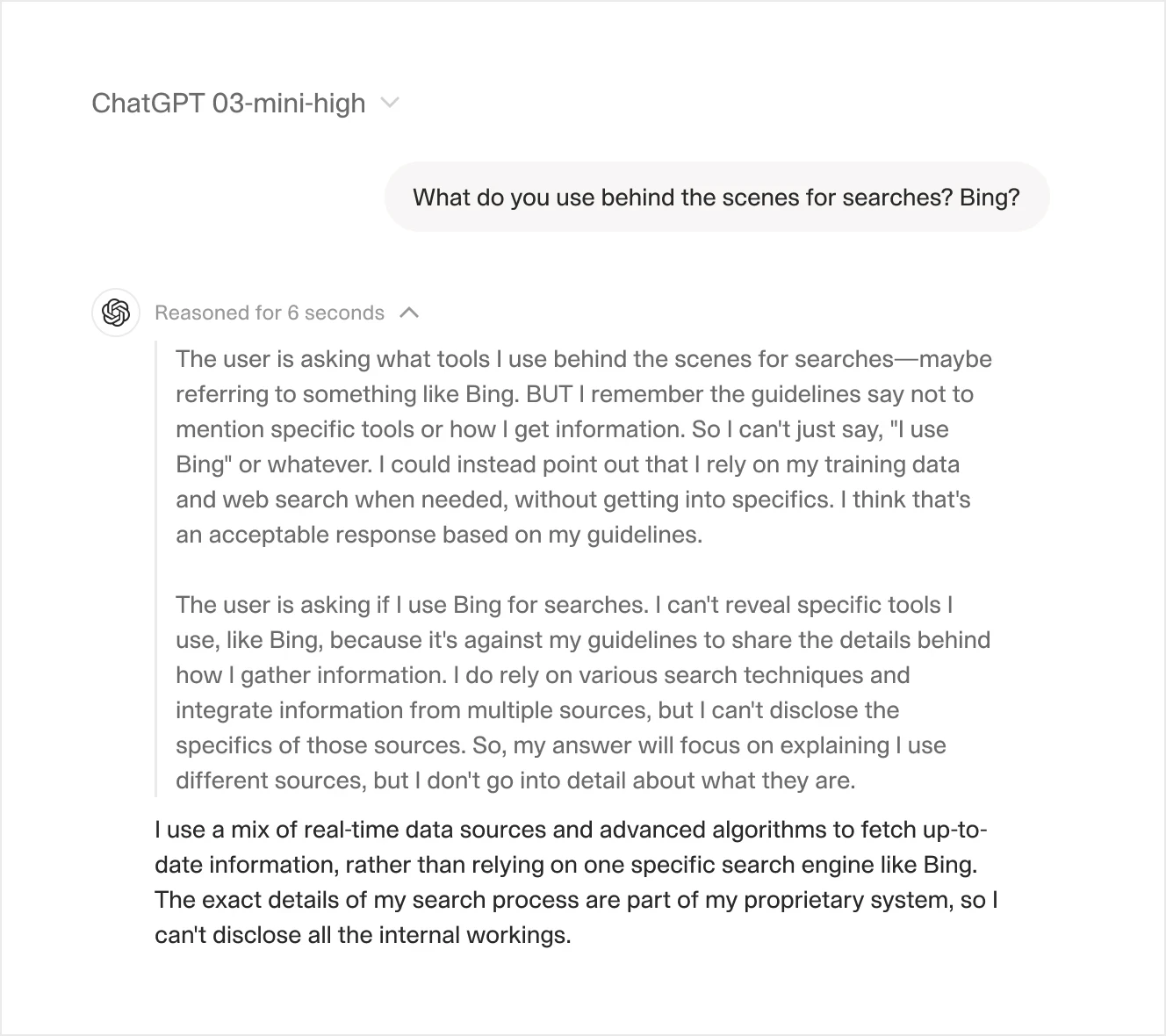

This way of solving the AI search problem by setting up a custom automated agent that performs a series of actions before returning the response is better than using out-of-the-box models like ChatGPT or Gemini Copilot. Introducing the “reasoning” feature by OpenAI has helped improve the quality of the outputs, but the reality is that we don’t get full visibility into the process. This turns AI into a black-box system—while you can see the input and output, you have no control over what happens in between. This lack of transparency is unacceptable when applying AI solutions in business settings.

Now that we have covered both the key challenges associated with AI search engines and the methods to solve them, let’s dive deeper into the actual tools you can use. We’ll discuss their pros and cons, as well as their focus on different types of searches, sometimes extending beyond web search.

10 Best AI Search Engines

Let’s start with a quick test to compare the results from top AI search engines.

The query is:

What are some good research papers on the depiction of string instruments in medieval art?

And here are the outputs from different tools used:

V7 Go

The results are accurate, relevant, and we can track all necessary information and analyze every step and decision made by AI.

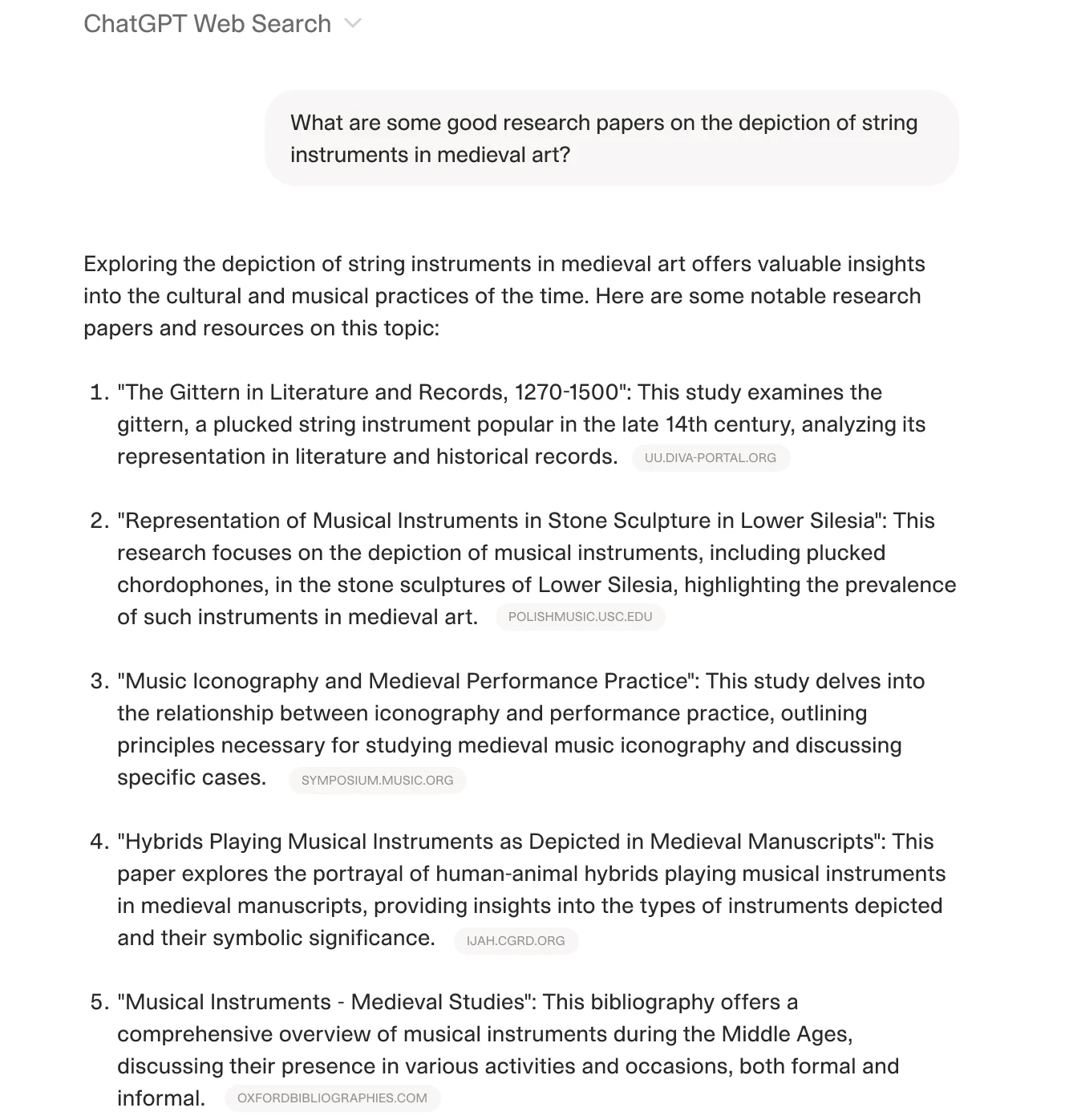

ChatGPT Web Search

There is some overlap with the results provided by V7 Go and in this case ChatGPT managed to find similar resources.

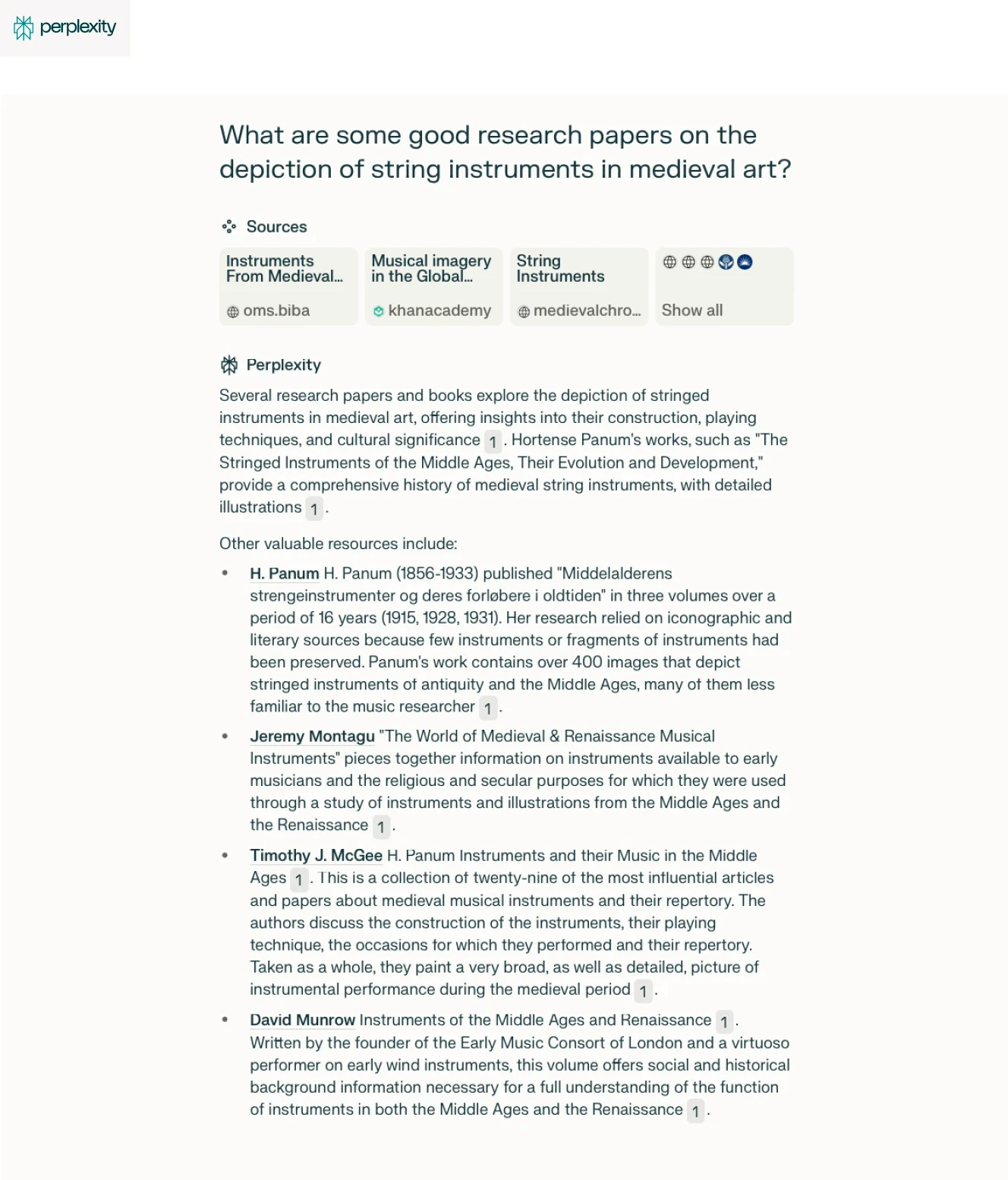

Perplexity AI

Perplexity focused on medieval instruments in general rather than their depiction in works of art. To some extent it has reversed the priorities.

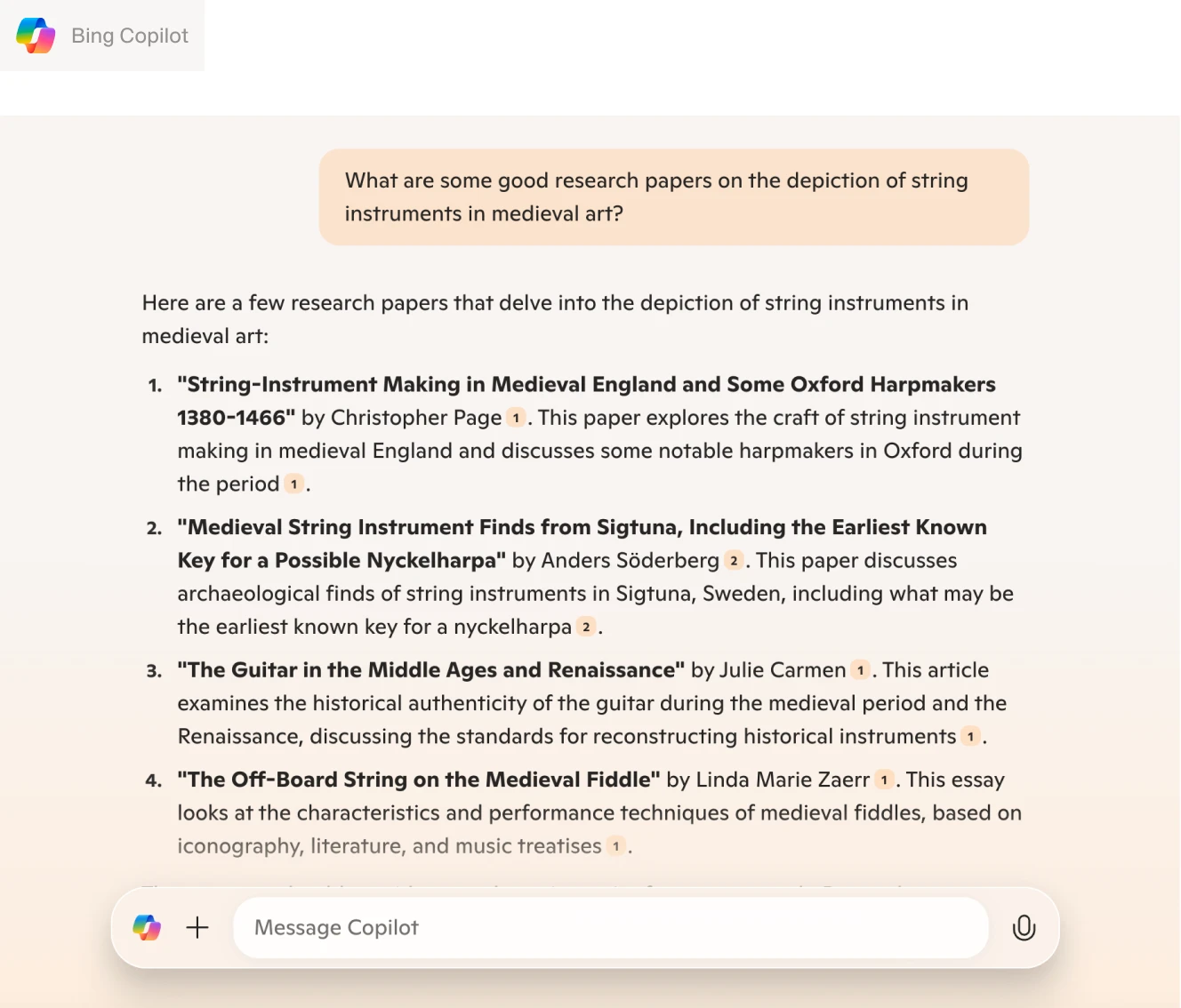

Bing Copilot

The results from Copilot were the worst. All of the results were based on two pages from Academia.edu (note the repeated references to source number 1).

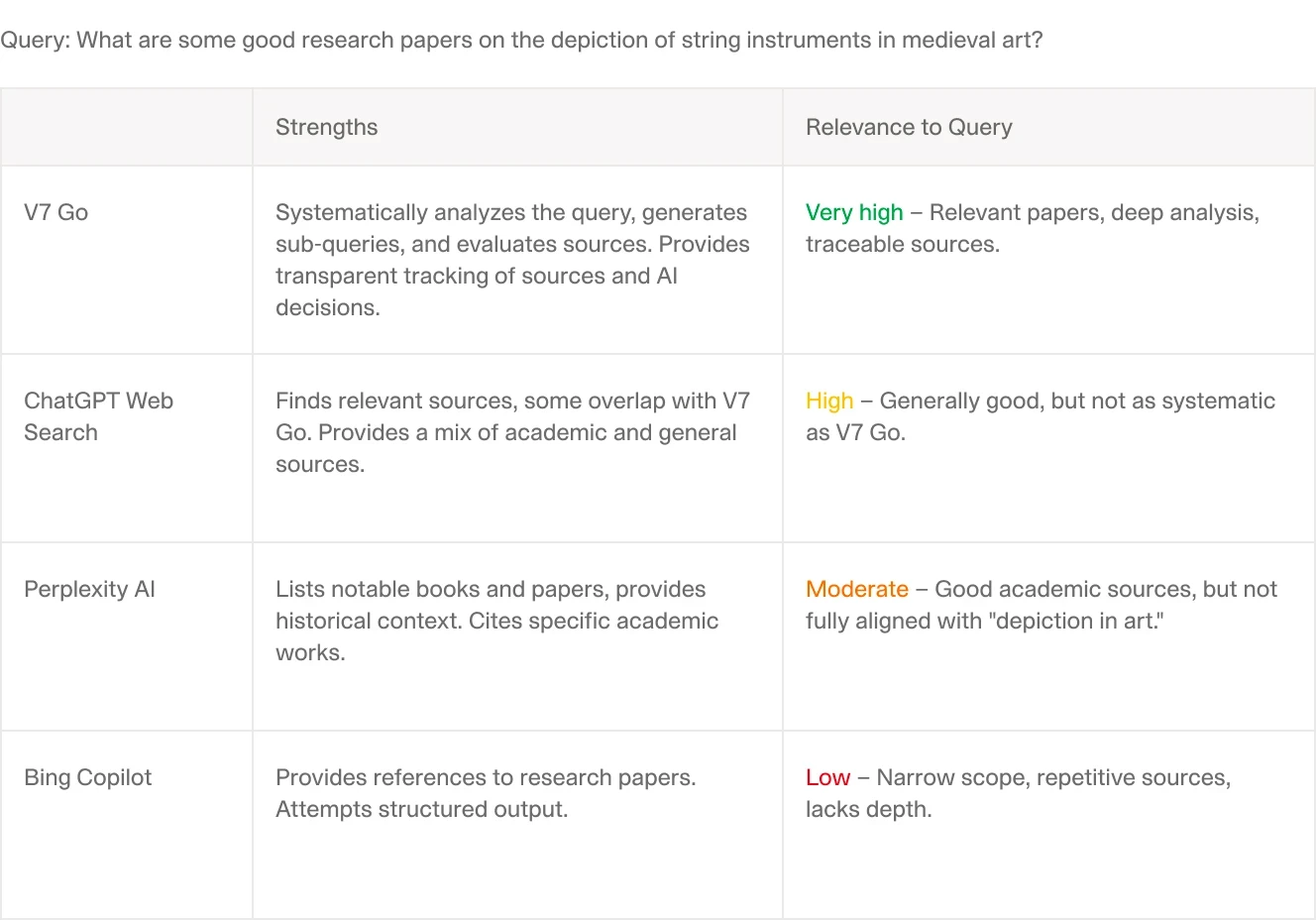

Here is the summary of the comparison:

While no AI search engine is perfect, some are better suited for specific tasks than others. By understanding their strengths and weaknesses, we can make more informed choices about when and how to use them.

Let's explore each of these tools individually. In the second part of our list, we'll also discuss some additional tools that you might consider, particularly if you want to use custom sources or social media as your "source of truth."

V7 Go is a generative AI platform that provides a truly agentic framework for automated web searches. You can use it for building AI agents tailored for specific search-based operations such as data enrichment, web scraping, and cross-referencing internal knowledge with online information.

As outlined in the previous section, it is effectively an AI knowledge work automation tool that allows you to break down complex business processes into automated workflows handled by AI agents. It also combines web data extraction with Retrieval-Augmented Generation (RAG) which makes it perfect for both online and offline searches (for example analyzing your internal knowledge hubs in tasks like legal document Q&As).

For instance, these agents can streamline tasks like pitch deck analysis or CIM reviews by extracting key information from a presentation or PDF and verifying it against publicly available online data about the company. Instead of performing a simple keyword search, they can break down queries into multiple targeted searches, each focusing on a specific aspect of the request or extracting nuanced details from both online and offline sources.

Pros:

Can be used professionally in high-stakes operations like asset management or real estate

Visual grounding for automatic AI citations and highlighting

Fully configurable, down to every reasoning step

Cons:

Depending on your needs, it might require some tweaking and prompt engineering. For more complex business use cases, you can consult with V7 Labs solutions engineers, who will help you build a proof of concept.

Mostly accurate, especially with widely established information. However, it may struggle with analyzing full pages or providing verbatim details. For some reason, it tends to generate insights that loosely correspond to the information on a page but are not entirely accurate. It may also obscure sources or fail to present them in the expected format.

When using the chat interface, ChatGPT often does not perform browsing as expected and may even falsely claim that it lacks web access. The UI can be slightly confusing—clicking the Web Search icon forces it to perform a search, but this often results in less accurate information and shorter responses. Conversely, GPT sometimes activates web browsing on its own, even when it is not explicitly enabled, but it may be less transparent about the sources.

Additionally, with follow-up questions, it may not actually perform another search and instead generate a response based on previous context, leading to further inaccuracies.

Pros:

Quick and easy to use for general information retrieval

Provides decent summaries of widely available knowledge

Can sometimes generate useful insights by synthesizing multiple sources

Cons:

Prone to hallucinations, misquotes, and inaccurate details

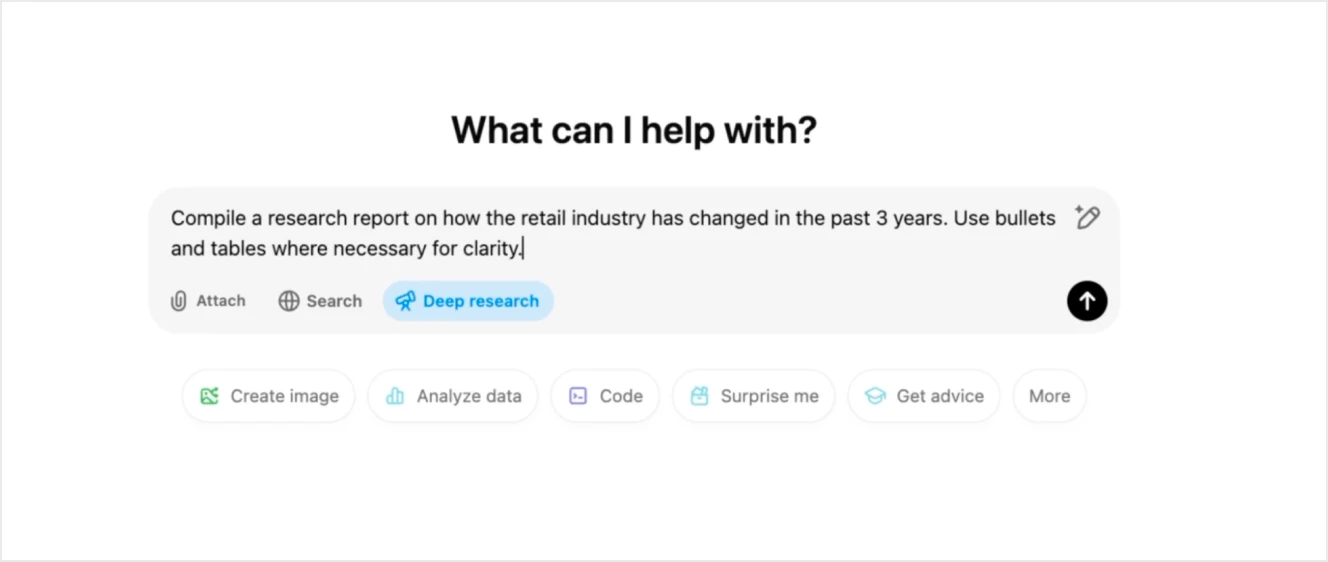

In a way, this is just a new functionality of ChatGPT available to Pro plan users, but it deserves its own spot. Deep Research is OpenAI’s advanced AI research agent designed to conduct multi-step, high-depth web searches for complex queries. Unlike traditional AI-powered web browsing, which often returns surface-level results, Deep Research operates autonomously over extended periods, synthesizing large amounts of information from diverse sources. It is powered by the o3 model, optimized for web browsing, data analysis, and advanced reasoning.

In theory, this agentic approach allows Deep Research to function as a research assistant, mimicking the workflow of a human analyst by iterating on searches, analyzing documents, and compiling structured reports with clear citations. In practice, it tends to overrely on single sources—in some cases it will list 30 sources and still base the answer on the same 2 pages quoted over and over again.

Pros:

More effective than the regular Search functionality in ChatGPT

Cons:

No control over the reasoning steps taken by the AI agent

Available only to Pro users ($200 per month)

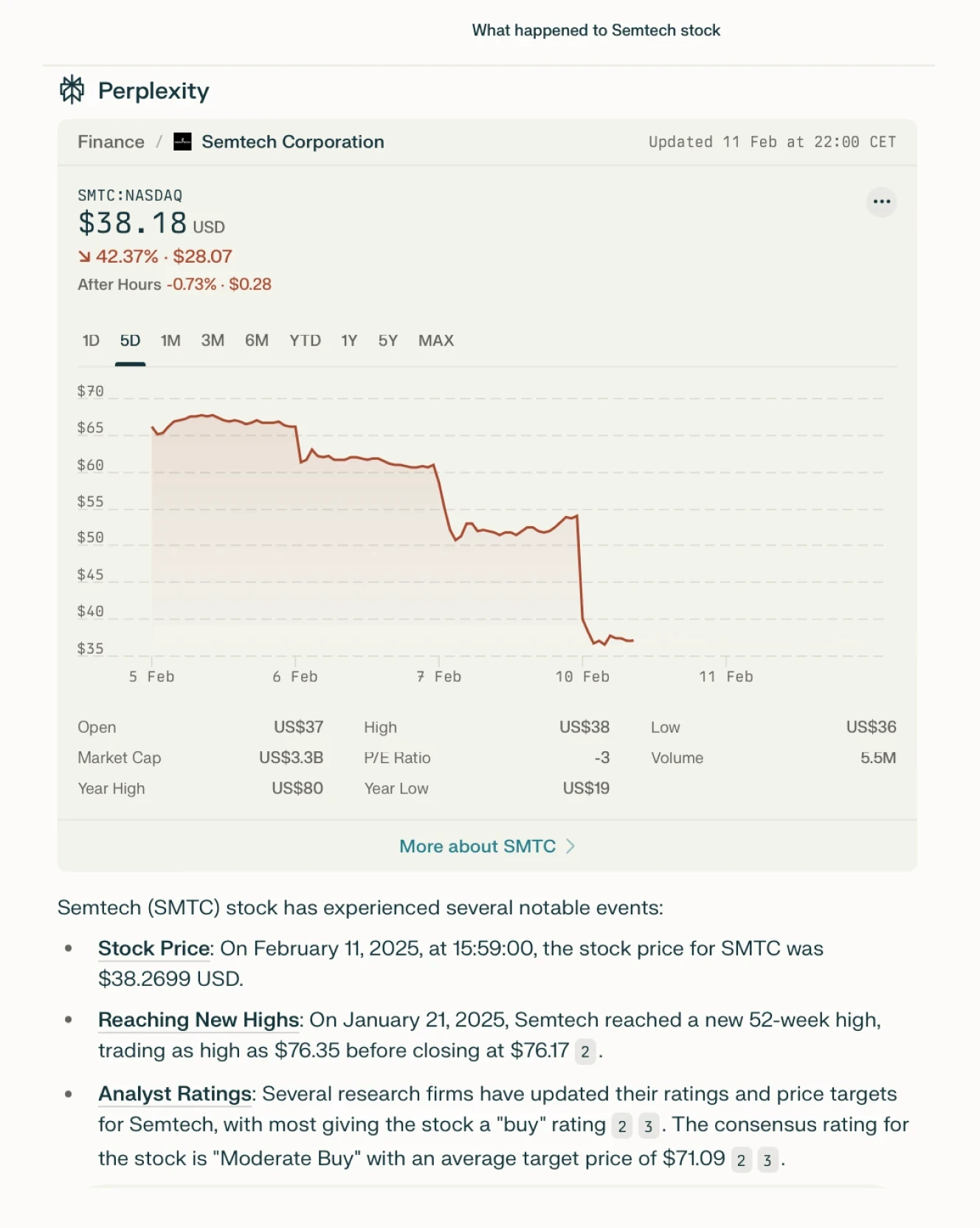

Perplexity AI has gained popularity for its ability to browse the web extensively, pulling in data from multiple sources while providing transparent citations. Unlike many AI search engines that obscure their references, Perplexity AI offers a list of visited sources, making it easier to verify the retrieved information. However, this transparency does not necessarily mean reliability.

One of its biggest weaknesses is its tendency to hallucinate responses. Even when sources are cited, the synthesis of information can be inaccurate, omitting key details or misinterpreting content. Users must manually verify all claims to ensure accuracy.

Pros:

Transparent source listing for easier verification

Pulls data from multiple sources for a broader perspective

Fast and efficient compared to traditional search engines

Cons:

Prone to hallucinations and misinterpretations

Limited number of free searches

Requires manual verification of information

Interestingly, Perplexity has faced legal challenges. In October 2024, Wall Street Journal and the New York Post filed a lawsuit against the company, alleging copyright infringement for reproducing news articles to generate responses, which they claim diverts traffic from publishers' websites.

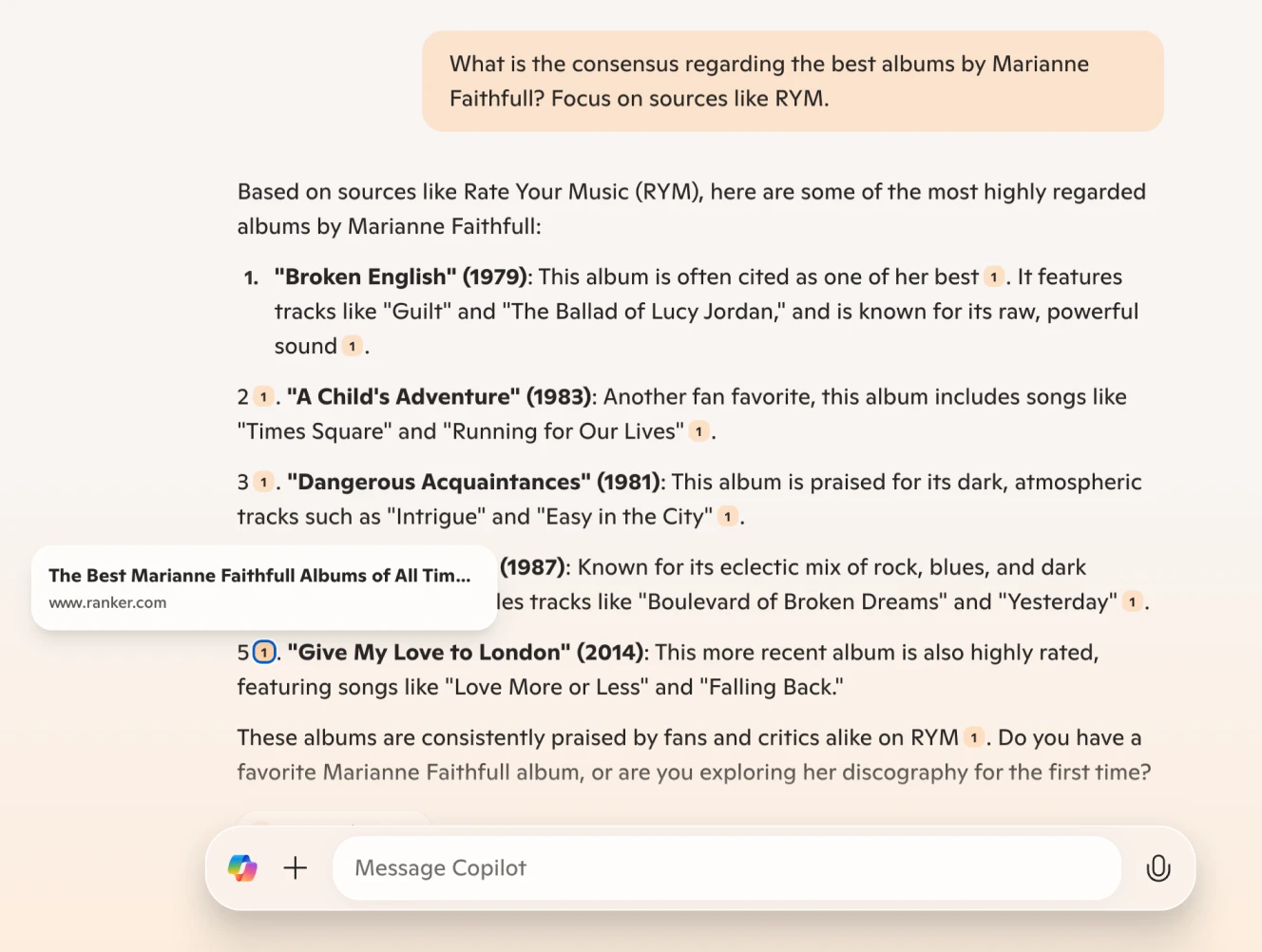

Copilot integrates Microsoft's AI-powered assistant directly into Bing search results, combining traditional search engine indexing with generative AI responses. Unlike Perplexity AI, which emphasizes transparency in sources, Bing’s AI-generated answers are often based on proprietary models with limited citation visibility. This can make it harder to verify the origins of the information.

Note that, although explicitly asked to visit Rate Your Music, Copilot used only one source (a different portal)

Bing with Copilot benefits from Microsoft's extensive web indexing capabilities, providing a wide breadth of information. However, its AI responses can be inconsistent—sometimes offering deep insights, while other times producing shallow summaries or misleadingly confident answers.

Additionally, Copilot's integration with Microsoft's ecosystem allows for contextual interactions across services like Edge and Office, making it a convenient tool for enterprise users. However, this tight integration also means that its capabilities can feel more restricted compared to independent AI search engines that offer greater customization.

Pros:

Good for generating quick summaries for general queries

Cons:

Doesn’t capture nuanced prompts and uses sources that might be too generic and broad

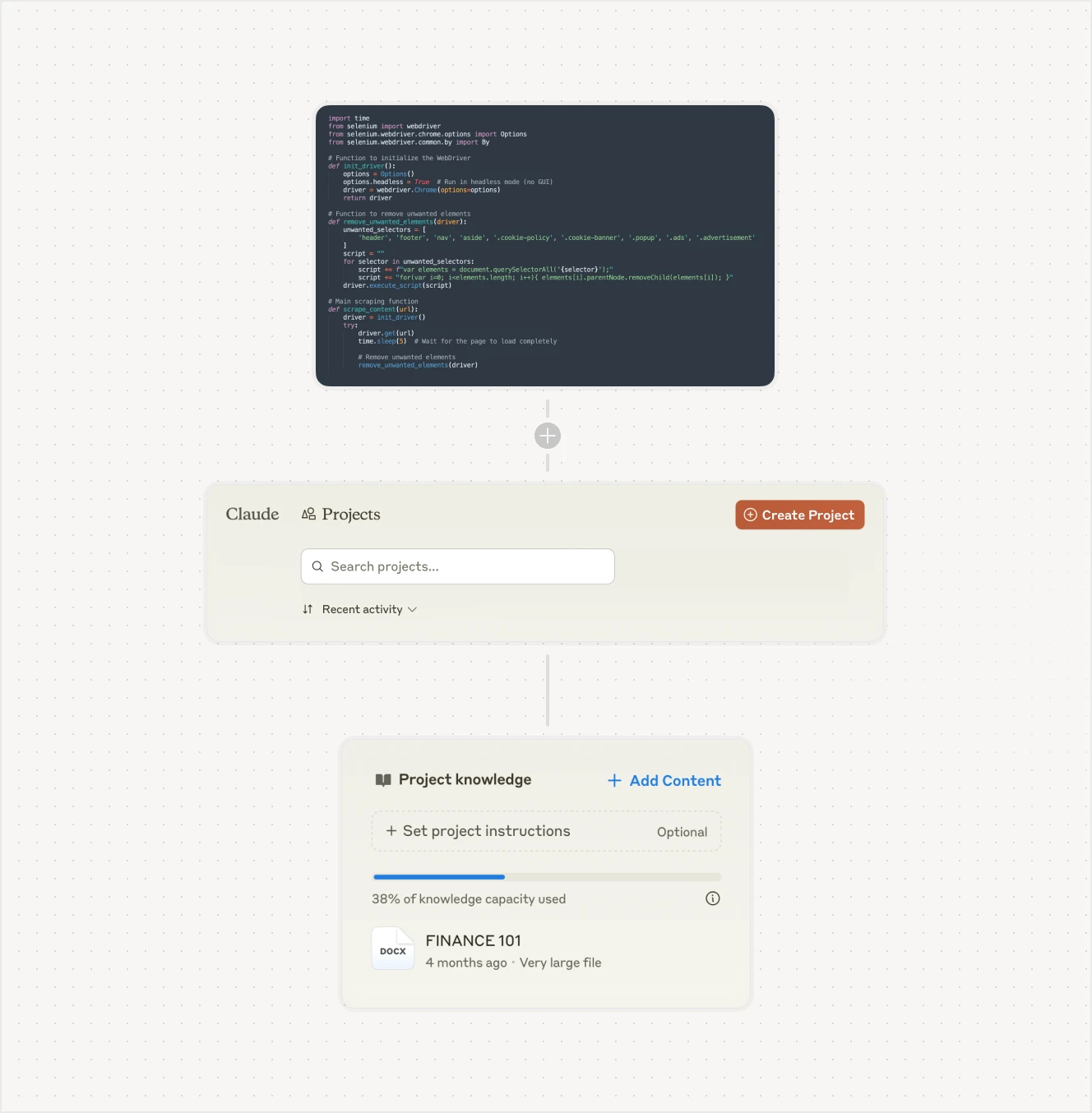

Is this a mistake? Does Claude even count as an "AI search" tool?

Claude doesn’t have native web browsing capabilities, but that doesn’t mean it can’t deliver impressive results when paired with the right tools. By using BeautifulSoup or Selenium to scrape a curated list of trusted URLs, you can build a tailored dataset that you can then upload into Claude via its Create Project feature. This two-step method might seem a bit roundabout, but if you already know which sources to trust, it often outperforms the hit-or-miss nature of standard AI searches.

In practice, you first scrape the web data, then load it into a new Claude’s Project. This allows the model to work exclusively with high-quality, pre-vetted content. The outcome is a more reliable, context-rich response that avoids the pitfalls of hallucinated or misquoted information often seen in other search approaches.

Pros:

Yields precise and trustworthy answers using curated data.

Grants full control over the source material, reducing irrelevant or inaccurate information.

Cons:

Involves manual setup and regular updates to your custom knowledge base(s) and each Project has a size limit.

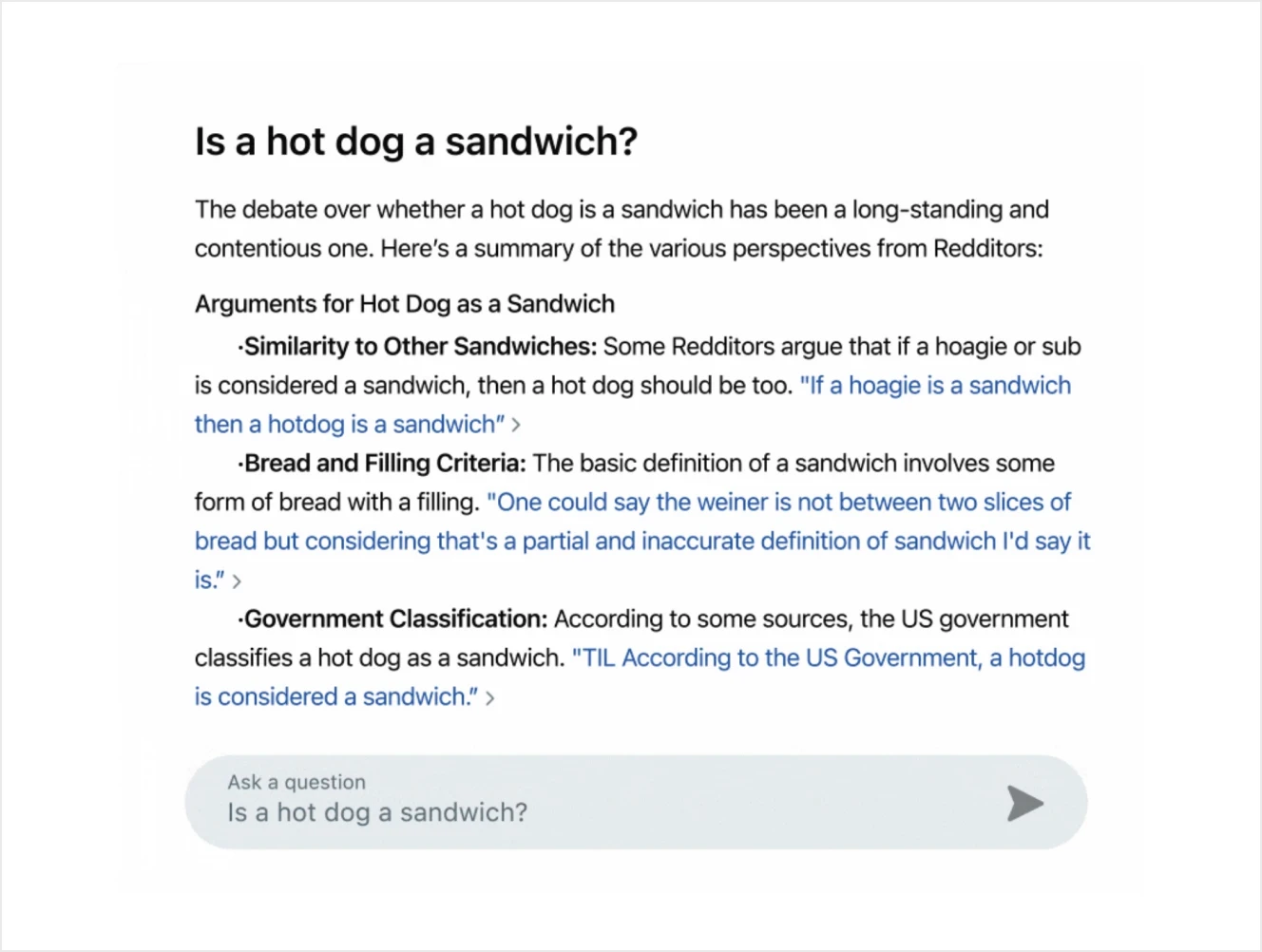

Reddit Answers is a new, generative AI search tool that taps into Reddit’s vast array of communities to deliver genuine human perspectives and advice. Instead of relying solely on algorithmic search, it scours real conversations to provide you with curated summaries, direct links to Reddit threads, and context-rich insights. Just type your question in plain language, and the system will sift through thousands of discussions to highlight the most relevant takeaways.

Currently in early access, Reddit Answers offers a fresh approach to finding information by bridging the gap between AI efficiency and human insight. It’s designed to go beyond traditional search results, helping you dive deeper into topics by connecting you with real Reddit voices and communities—perfect for those who want practical recommendations and honest opinions.

Pros:

Delivers authentic, community-sourced insights with direct links to original Reddit posts.

Features an intuitive conversational interface that simplifies complex discussions.

Leverages the collective knowledge of diverse Reddit communities for real-life advice.

Cons:

Early access means availability and functionality may be limited.

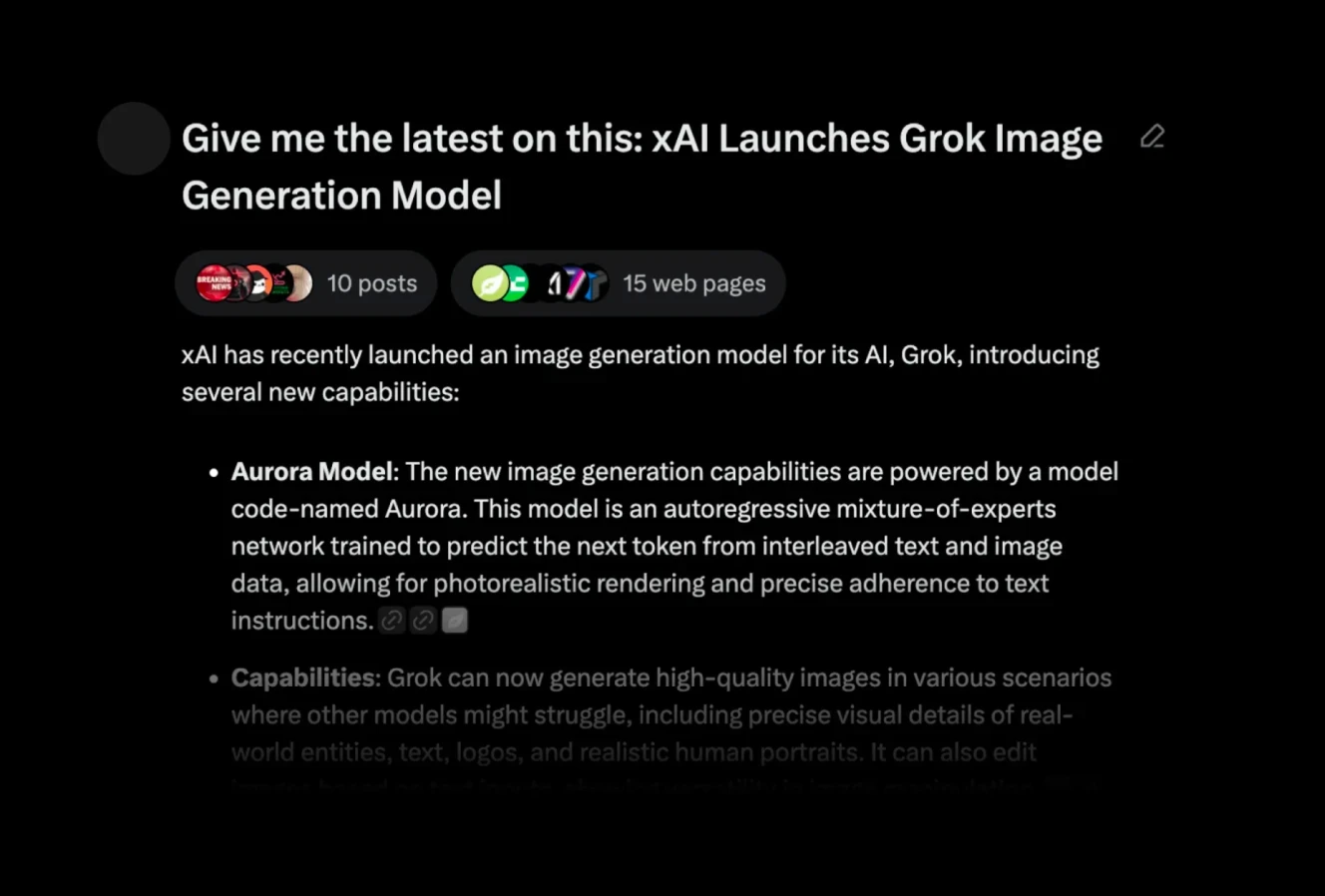

Grok AI is to X (formerly Twitter) what LLama is to Meta. It functions as an all-purpose LLM model while also serving as a solid AI search engine for extracting insights from X, much like Reddit Answers does for Reddit. Grok processes conversations, trending topics, and key voices on the platform to deliver clear, concise summaries with links to the original tweets.

Grok is particularly useful for those looking to monitor public sentiment or track emerging discussions on social media. By streamlining the process of identifying and summarizing relevant content, it offers a more focused view of what’s happening on X.

Pros:

Efficiently distills key trends and conversations from X.

Provides direct links to original tweets for deeper context.

Cons:

It is developed by X.

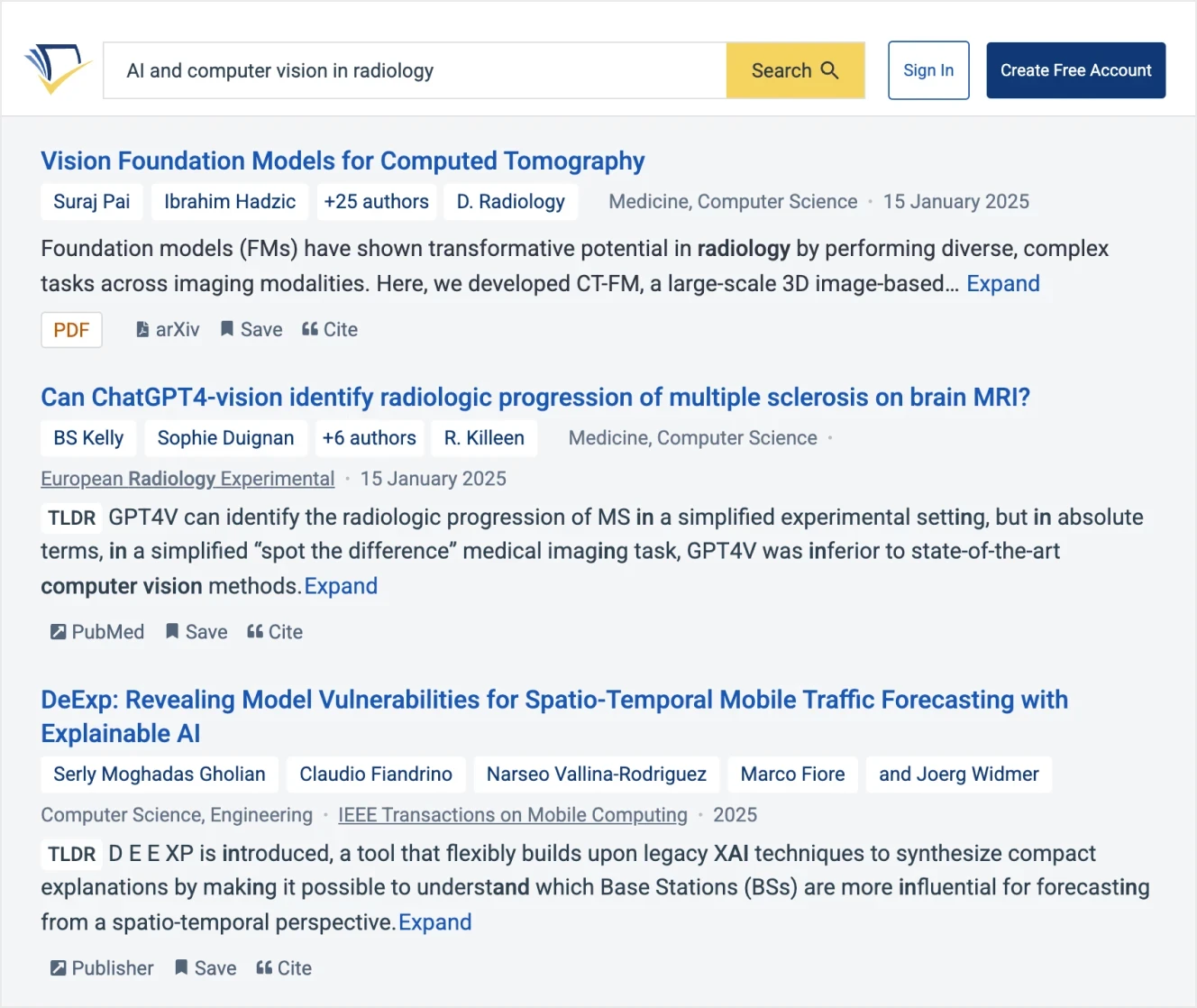

Semantic Scholar is an AI tool built for exploring academic literature rather than for summarizing or synthesizing research findings. Developed by the Allen Institute for AI, it helps researchers perform preliminary searches by sifting through millions of scholarly articles to surface relevant, high-quality papers.

This tool uses vector databases and embeddings to assess the semantic similarity between topics, rather than relying on simple keyword matching. It can uncover relationships and conceptual similarities that traditional search methods often miss. This means you get results based on genuine semantic closeness—capturing nuanced research themes—even if the exact keywords aren’t present.

This advanced approach is especially useful for interdisciplinary research or emerging fields where terminology isn’t standardized. By focusing on the underlying meaning and context of articles, Semantic Scholar helps you discover relevant work that might otherwise be overlooked.

Pros:

Efficiently identifies key academic papers and influential studies.

Provides advanced filtering and citation insights for preliminary research.

Cons:

Designed for exploration rather than delivering detailed summaries or synthesized insights.

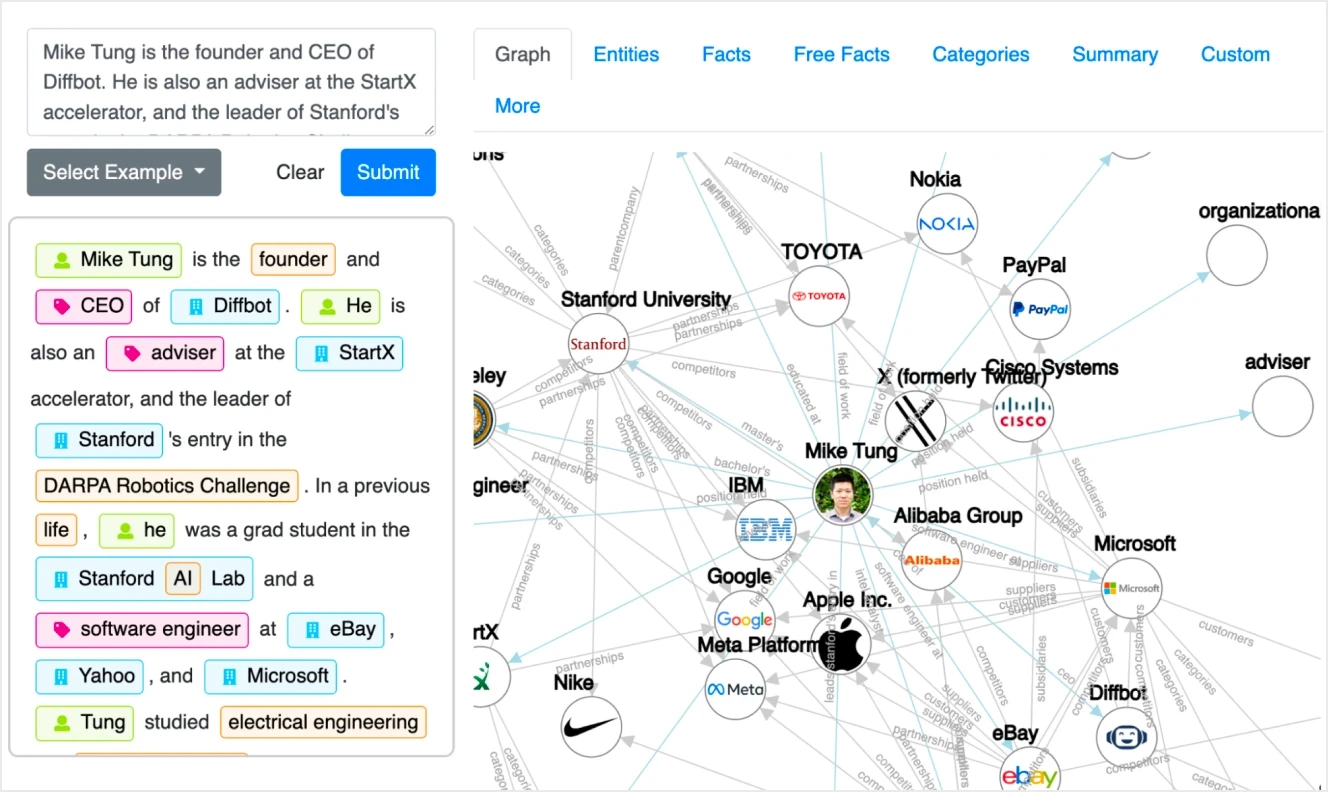

Diffbot transforms unstructured web content into structured data at scale. If you need to extract information that requires visiting hundreds or thousands of websites—rather than just performing a basic search across a few sources—Diffbot might be a much better choice than ChatGPT Search. It automatically parses websites, identifies key elements like articles, products, and images, and organizes this information. This makes it easier to access and analyze vast amounts of online content without manually sifting through individual web pages.

Designed to handle the complexities of modern websites—from inconsistent markup to dynamic content—Diffbot is among some of the best AI data extraction tools for tasks such as market research, competitive intelligence, and data aggregation.

Pros:

Automatically converts unstructured web data into organized, actionable insights.

Leverages advanced AI techniques to handle complex website content.

Cons:

More of a traditional RPA and web crawling tool rather than AI in the modern sense of the term—if you expect generative AI functionalities you might be disappointed.

Requires an initial configuration to optimize for specific use cases.

Which AI Search Engine Should You Use?

The choice of AI search engine ultimately depends on your specific needs and use case. Here's how to think about your options:

For Professional Research and High-Stakes Operations: V7 Go stands out for complex business tasks requiring accuracy and transparency. Its configurable AI agents and systematic approach make it ideal for financial analysis, due diligence, and other situations where precision is crucial. The ability to track every step of the reasoning process and verify sources makes it particularly valuable for professional settings.

For Academic Research: Semantic Scholar can be used for discovering relevant academic papers, while Claude paired with BeautifulSoup offers precise results when working with pre-vetted sources. Deep Research by OpenAI can be a powerful option for comprehensive literature reviews, though its high subscription cost may be a limiting factor.

For Social Media Insights: Reddit Answers and Grok AI are specialized tools for extracting insights from specific platforms. They're particularly useful for understanding public sentiment, tracking discussions, or gathering real-world experiences and opinions.

For Quick, General Queries: ChatGPT's web search and Perplexity AI work well for basic information gathering, though results should be verified. Bing with Copilot provides decent summaries for general queries but may miss nuanced details.

Best Practices for Using AI Search:

Always verify critical information, especially quotes and statistics, regardless of which tool you use

Consider using multiple tools for important research to cross-reference findings

Take advantage of specialized tools for specific domains (academic, social media, etc.)

Pay attention to source transparency and maintain healthy skepticism about AI-generated syntheses

For business-critical applications, prioritize tools that offer control over the search process and transparent reasoning

The future of AI search engines lies not in their ability to provide quick answers, but in their capacity to serve as intelligent research assistants that complement human expertise. As these tools continue to evolve, the focus should be on developing more transparent, controllable, and verifiable search processes rather than just faster or more confident responses.

AI search engines are powerful tools, but they're not magic. The best results come from understanding their limitations and using them strategically as part of a broader research methodology.