Segment Anything Model (SAM): Intro, Use Cases, V7 Tutorial

Oct 31, 2023

In this article, we'll delve into the origins, core principles, and far-reaching applications of SAM—and offer a glimpse into its profound impact on the future of computer vision.

Guest Author

Segment Anything Model (SAM) is part of Meta AI’s Segment Anything project, whose goal has been to revolutionize how segmentation models are built. With its promise of “reducing the need for task-specific modeling expertise, training compute, and custom data annotation,” SAM holds the potential to transform how we perceive and interact with visual data across different use cases.

In this article, we’ll provide SAM’s technical breakdown, take a look at its current use cases, and talk about its impact on the future of computer vision.

Here’s what we’ll cover:

What is the Segment Anything Model?

SAM’s network architecture

How does SAM support real-life use cases?

How to use SAM for AI-assisted labeling? V7 tutorial

What does the future hold for SAM?

V7 integrates with SAM, allowing you to label your data faster than ever before.

What is the Segment Anything Model (SAM)?

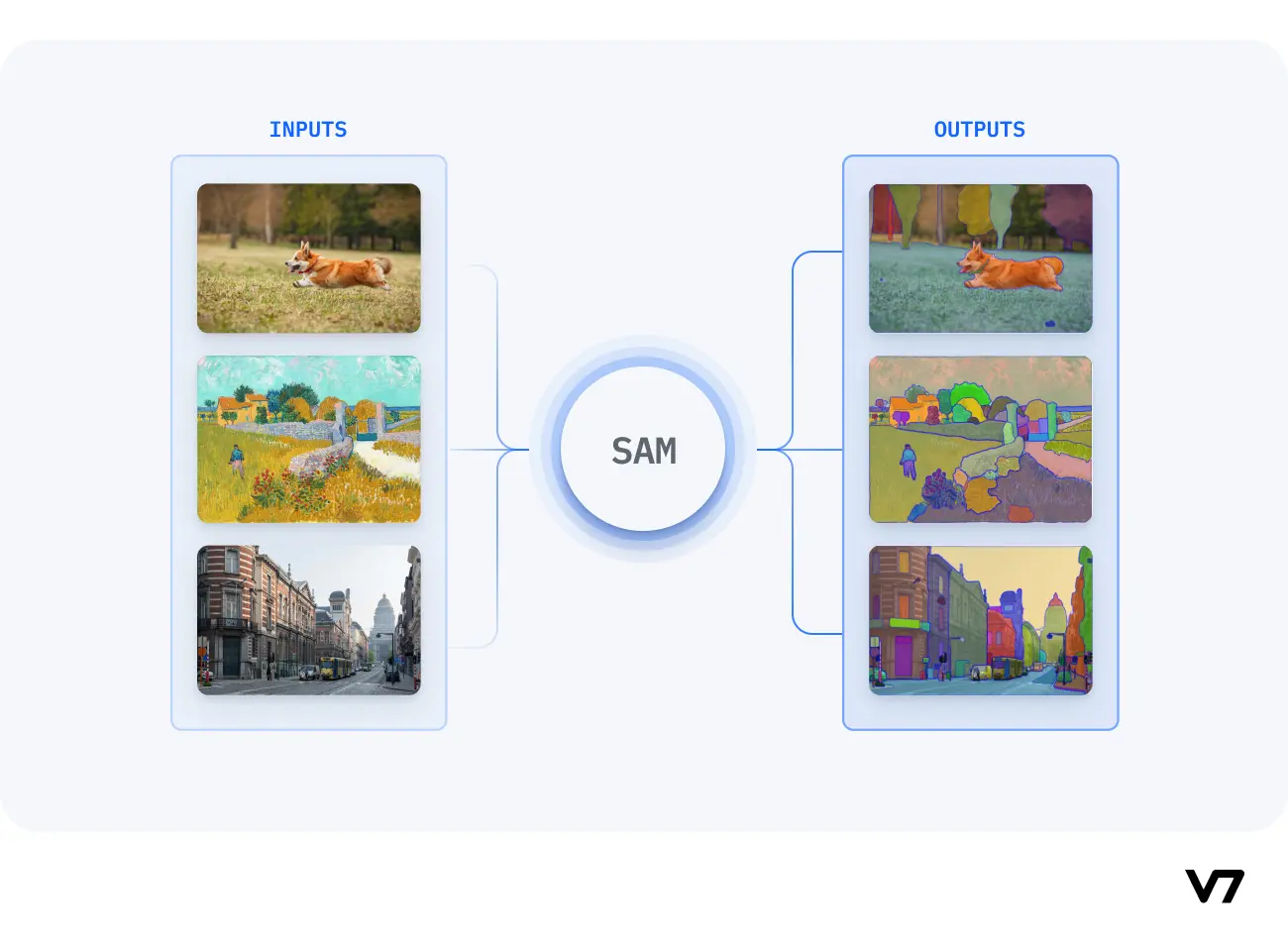

SAM is designed to revolutionize the way we approach image analysis by providing a versatile and adaptable foundation model for segmenting objects and regions within images.

Unlike traditional image segmentation models, which require extensive task-specific modeling expertise, SAM greatly reduces the need for such specialization. Its primary objective is to simplify segmentation by serving as a foundation model that can be prompted with various inputs, such as clicks, boxes, or text, making it accessible to a broader range of users and applications.

What sets SAM apart is its ability to generalize to new tasks and image domains without custom data annotation or extensive retraining. SAM achieves this by being trained on a diverse dataset of over 1 billion segmentation masks, collected as part of the Segment Anything project. Combined with its promptable design, training at this scale lets SAM be adapted to specific segmentation tasks through prompts, much as prompting is used to steer natural language processing models.

SAM's versatility, real-time interaction capabilities, and zero-shot transfer make it an invaluable tool for various industries, including content creation, scientific research, augmented reality, and more, where accurate image segmentation is a critical component of data analysis and decision-making processes.

SAM's network architecture

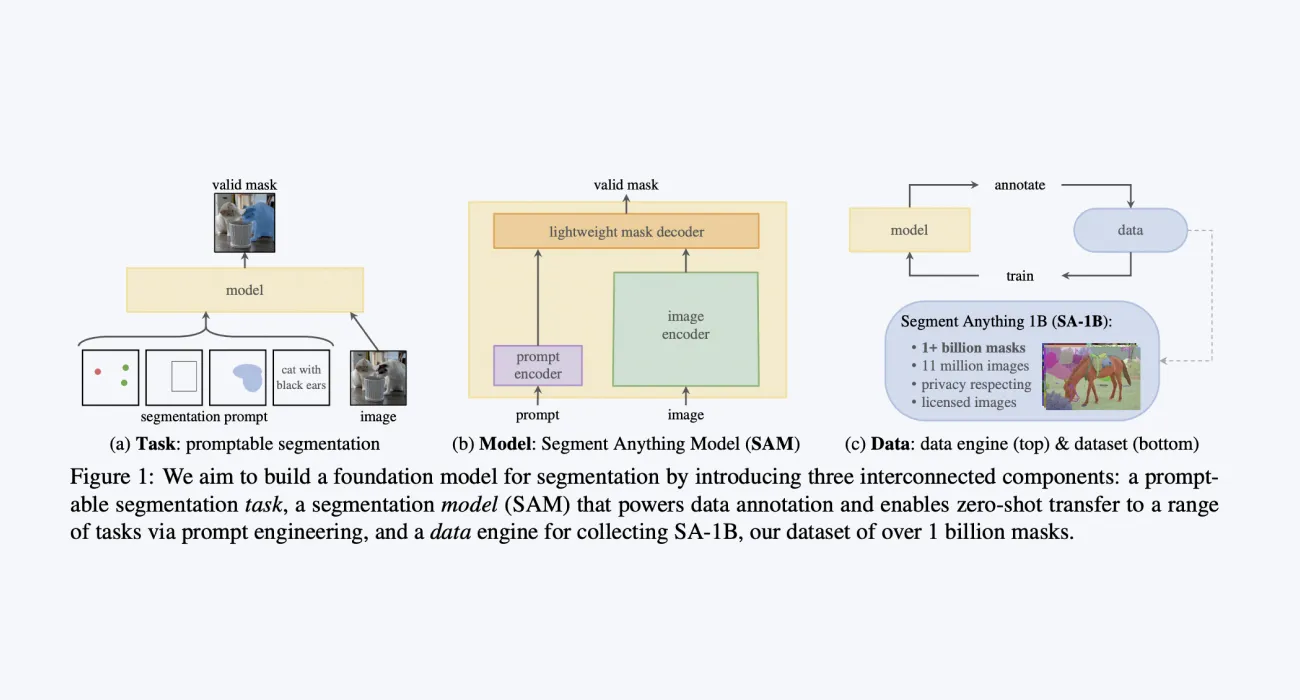

At the heart of the Segment Anything Model (SAM) lies a network architecture designed for real-time, promptable image segmentation. That network is only one part of the picture: SAM's overall design rests on three fundamental components, the task, the model, and the dataset, which together give SAM its versatility.

In brief, the three components are:

Task Component: Defines user interactions and segmentation tasks through prompts, accommodating a wide range of real-world scenarios.

Model Component: Employs an image encoder, a prompt encoder, and a lightweight decoder to swiftly and accurately generate segmentation masks.

Dataset Component: Relies on the Segment Anything 1-Billion mask dataset (SA-1B), with over 1 billion masks, to teach SAM generalized capabilities without extensive retraining.

Together, these components allow SAM to handle a wide range of image segmentation tasks without task-specific retraining. In the sections that follow, we will look at each of them in more detail.

SAM design elements

SAM's task and model design elements work together to make image segmentation accessible and versatile. The task design ensures that users can communicate their segmentation needs effectively, while the model design leverages state-of-the-art techniques to provide accurate and rapid segmentation results.

Task design

SAM's task design element defines how the model interacts with and performs image segmentation tasks. Its primary goal is to make the segmentation process as flexible, adaptable, and user-friendly as possible.

Here are key aspects of SAM's task design:

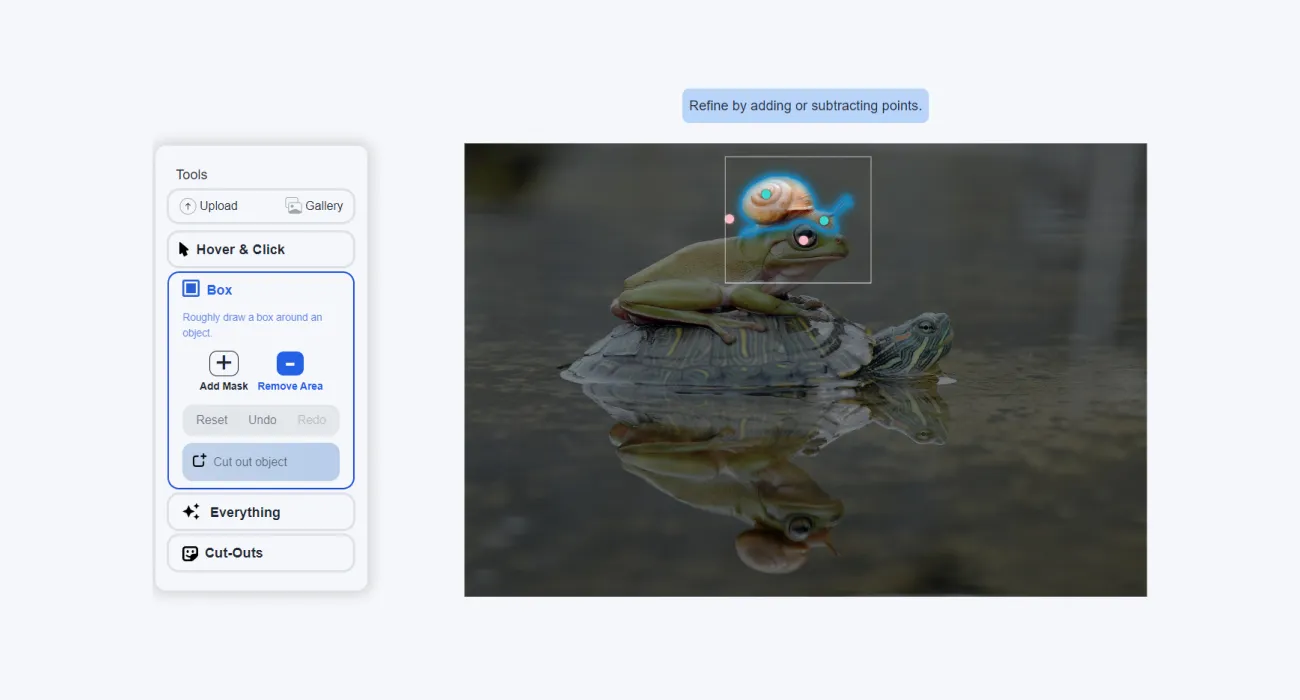

Promptable interface: SAM's task design revolves around a "promptable interface." This means that users can provide prompts to the model in various forms, such as clicks, boxes, freeform text, or any information indicating what to segment in an image. This versatility allows users to specify the segmentation task according to their needs.

Interactive segmentation: SAM supports interactive segmentation, allowing users to provide real-time guidance to refine masks. Users can interactively click on points to include or exclude from the object, draw bounding boxes, or provide textual descriptions, making the segmentation process more intuitive.

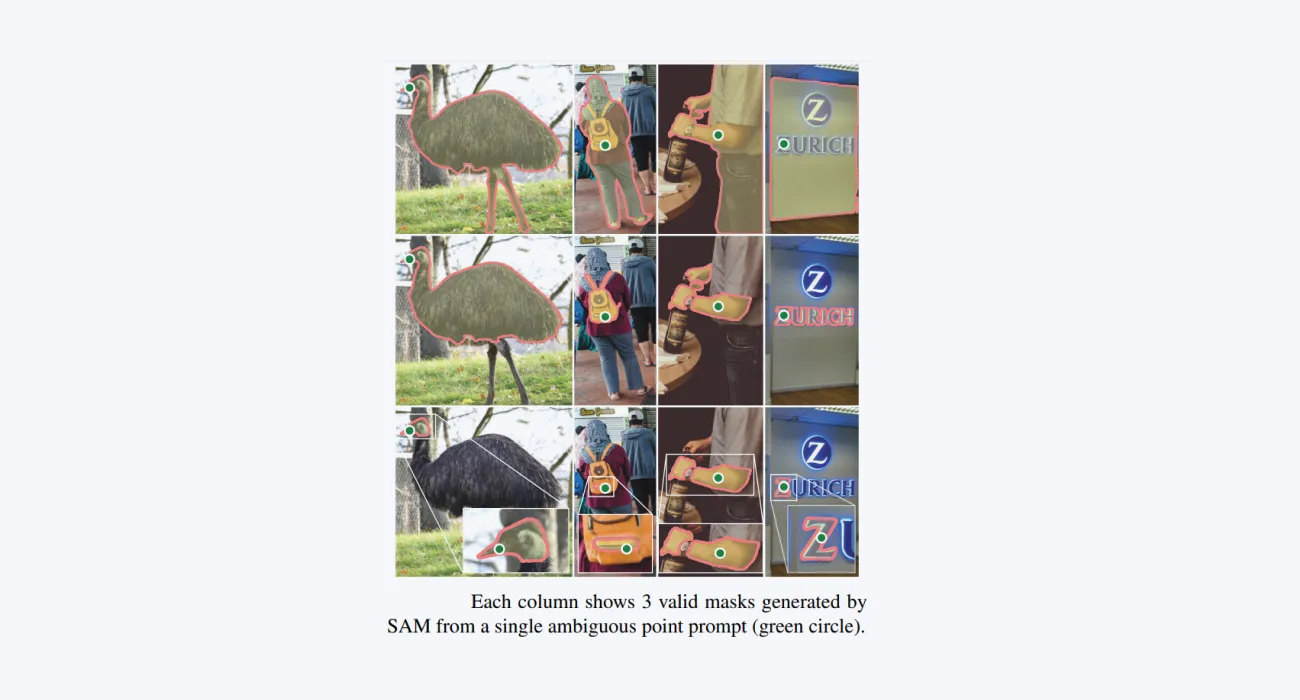

Adaptive to ambiguity: SAM's task design accounts for cases where prompts might be ambiguous, potentially referring to multiple objects. Despite this ambiguity, SAM aims to generate a reasonable mask for one of the possible interpretations, ensuring usability even in challenging scenarios.

Real-time processing: SAM's task design includes real-time processing capabilities. Once the image embedding has been precomputed, SAM can generate a segmentation mask for each new prompt in about 50 milliseconds, fast enough for real-time interaction with the model.
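To make the promptable interface more concrete, here is a minimal, library-free sketch of how an interactive session might accumulate clicks and resolve an ambiguous prompt. It assumes the labeling convention of Meta's open-source `segment_anything` package, where a point label of 1 marks foreground (include) and 0 marks background (exclude), and where requesting multiple outputs returns three candidate masks, each with a predicted-IoU score. The `Prompt` class, the `pick_mask` helper, and the mask names and scores are hypothetical stand-ins, not SAM's actual API or outputs.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

FOREGROUND = 1  # "include": a click on the object
BACKGROUND = 0  # "exclude": a click on something that should not be in the mask


@dataclass
class Prompt:
    """Hypothetical container for the sparse prompts of one interactive session."""
    points: List[Tuple[int, int]] = field(default_factory=list)  # pixel (x, y)
    labels: List[int] = field(default_factory=list)              # one label per point
    box: Optional[Tuple[int, int, int, int]] = None              # (x0, y0, x1, y1)

    def add_click(self, x: int, y: int, include: bool = True) -> None:
        """Record a refinement click; a real tool would re-run the decoder here."""
        self.points.append((x, y))
        self.labels.append(FOREGROUND if include else BACKGROUND)


def pick_mask(candidates):
    """Resolve an ambiguous prompt by keeping the highest-scoring candidate.

    `candidates` holds (mask, predicted_iou) pairs, e.g. subpart / part / whole.
    """
    return max(candidates, key=lambda pair: pair[1])


prompt = Prompt()
prompt.add_click(120, 80)                 # "this object..."
prompt.add_click(135, 95, include=False)  # "...but not this region"

# Toy stand-ins for three nested interpretations of one ambiguous click.
candidates = [("subpart_mask", 0.71), ("part_mask", 0.88), ("whole_mask", 0.93)]
best_mask, best_score = pick_mask(candidates)
print(prompt.labels, best_mask, best_score)  # [1, 0] whole_mask 0.93
```

Picking the highest-scoring candidate is just one simple policy; an interactive tool could instead show all three masks and let the user choose. Once several clicks pin the object down, the ambiguity largely disappears and a single output mask is usually enough.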

Model design

SAM's model design is the architectural foundation that enables it to perform image segmentation tasks effectively and efficiently.

Here are key aspects of SAM's model design:

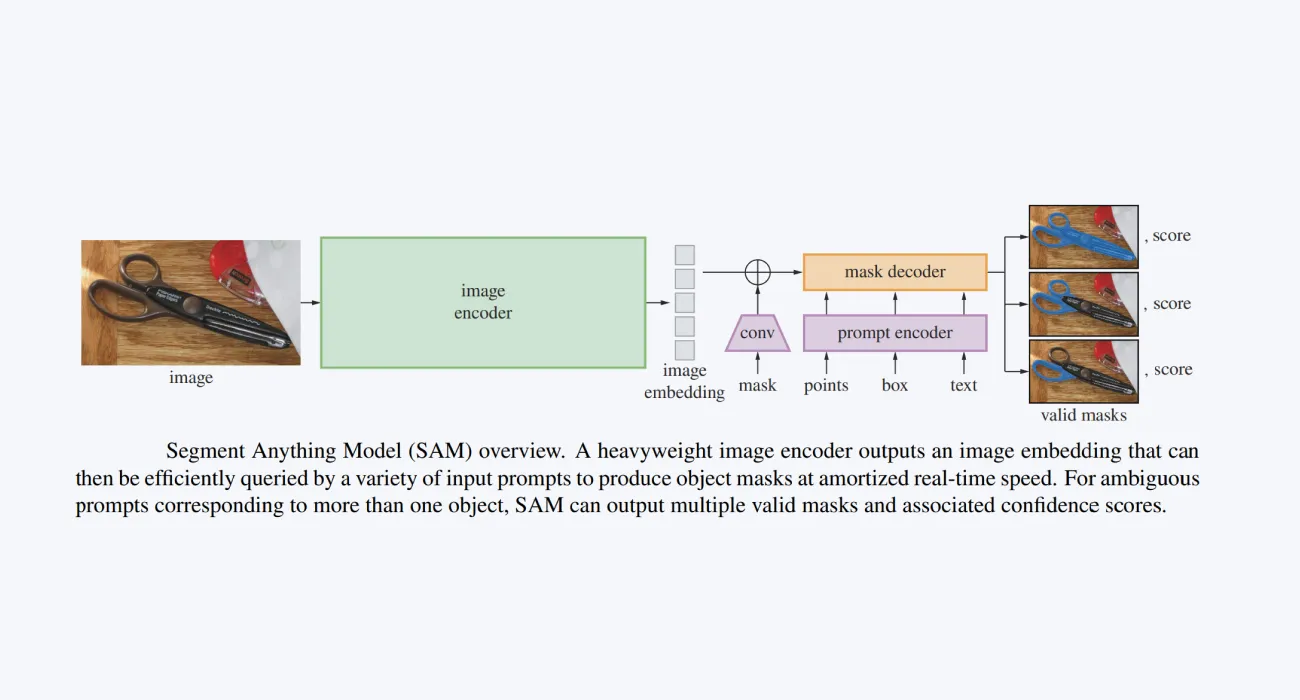

Image encoder: The image encoder runs once per image, producing an embedding that captures the image's essential features and serves as the basis for all subsequent segmentation.

Prompt encoder: SAM's model incorporates a lightweight prompt encoder that converts user prompts into embedding vectors in real time. This prompt encoder interprets various prompt formats, such as clicks, boxes, or text, and converts them into a format the model can understand.

Decoding for segmentation masks: The lightweight decoder is responsible for predicting segmentation masks. It combines information from both the image embedding and the prompt embedding to generate accurate masks that identify the object or region specified by the user.

Efficient runtime: SAM's model is designed to operate efficiently at runtime. Once the image embedding has been computed, the prompt encoder and mask decoder are light enough to run on a CPU in a web browser, allowing users to interact with the model in real time. This runtime efficiency is a critical factor in SAM's usability.
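The split between a heavy, run-once image encoder and a lightweight per-prompt decoder is what makes real-time interaction possible. The sketch below illustrates the caching pattern with stand-in functions that only count their calls; `SegmentationSession`, `fake_encoder`, and `fake_decoder` are hypothetical names, and no real model is involved.

```python
class SegmentationSession:
    """Hypothetical wrapper: run the heavy image encoder once per image and reuse
    the cached embedding, so each new prompt only pays for the light decoder."""

    def __init__(self, image_encoder, mask_decoder):
        self.image_encoder = image_encoder
        self.mask_decoder = mask_decoder
        self._image_id = None
        self._embedding = None

    def set_image(self, image_id, image):
        if image_id != self._image_id:  # re-encode only when the image changes
            self._embedding = self.image_encoder(image)
            self._image_id = image_id

    def predict(self, prompt):
        if self._embedding is None:
            raise RuntimeError("call set_image() before predict()")
        return self.mask_decoder(self._embedding, prompt)


# Stand-in encoder/decoder that just count how often they are called.
calls = {"encoder": 0, "decoder": 0}


def fake_encoder(image):
    calls["encoder"] += 1
    return sum(image)  # placeholder for a real feature map


def fake_decoder(embedding, prompt):
    calls["decoder"] += 1
    return ("mask", embedding, prompt)  # placeholder for a real mask


session = SegmentationSession(fake_encoder, fake_decoder)
session.set_image("img-001", [1, 2, 3])
for click in [(10, 10), (12, 14), (40, 8)]:  # three interactive refinements
    session.predict(click)
session.set_image("img-001", [1, 2, 3])      # same image again: no re-encoding
print(calls)  # {'encoder': 1, 'decoder': 3}
```

The same idea appears, in spirit, in the `set_image` and `predict` methods of `SamPredictor` in Meta's open-source `segment_anything` package: the embedding is computed once in `set_image`, and every later `predict` call reuses it.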

Data engine and dataset

The data engine of the Segment Anything Model (SAM) is a crucial component responsible for creating and curating the vast and diverse dataset known as SA-1B, which plays a pivotal role in SAM's training and its ability to generalize to new tasks and domains. This data engine incorporates various gears or stages to efficiently collect and enhance the dataset:

Interactive Annotation with Model Assistance (First Gear): In this initial stage, human annotators actively engage with SAM to interactively annotate images. They use the model to assist them in the annotation process, leveraging SAM's capabilities to segment objects. This stage ensures that the dataset's initial annotations are high-quality and informative.

Combination of Automatic and Assisted Annotation (Second Gear): To increase the diversity of collected masks, the second gear of the data engine mixes automatic and assisted annotation. SAM first generates masks automatically for the objects it is confident about, and annotators then label the remaining objects that the model missed. This combination broadens the dataset's variety.

Fully Automatic Mask Creation (Third Gear): The final gear of the data engine involves fully automatic mask creation. This stage allows the dataset to scale significantly, as SAM generates segmentation masks without human intervention. This automation enables the dataset to expand rapidly while maintaining data quality.

By incorporating these gears, the data engine efficiently produces a massive and diverse dataset of over 1.1 billion segmentation masks collected from approximately 11 million licensed and privacy-preserving images. The iterative process of updating SAM with new annotations and improving both the model and the dataset ensures that SAM becomes increasingly proficient in various segmentation tasks.
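The SAM paper describes the fully automatic gear as keeping only masks that the model is confident about and that are stable, meaning the mask barely changes when the cutoff used to binarize it is nudged up or down. Below is a minimal sketch of that filter in plain Python. The function names and toy logits are hypothetical; the default thresholds (0.88 for predicted IoU, 0.95 for stability, and a logit offset of 1.0) are modeled on the defaults of `SamAutomaticMaskGenerator` in the open-source release and should be treated as tunable assumptions.

```python
def stability_score(logits, threshold=0.0, offset=1.0):
    """IoU between the masks obtained by binarizing `logits` at threshold +/- offset.

    `logits` is a 2-D list of per-pixel mask logits. A stable mask barely changes
    when the cutoff moves, so its score stays close to 1.0.
    """
    strict = sum(v > threshold + offset for row in logits for v in row)
    loose = sum(v > threshold - offset for row in logits for v in row)
    # The strict mask is a subset of the loose one, so IoU = |strict| / |loose|.
    return strict / loose if loose else 0.0


def keep_mask(logits, predicted_iou, iou_thresh=0.88, stability_thresh=0.95):
    """Keep a mask only if the model is confident in it and it is stable."""
    return predicted_iou >= iou_thresh and stability_score(logits) >= stability_thresh


crisp = [[5.0, 4.0, -6.0], [4.5, 3.0, -5.0]]  # confident, sharp boundary
fuzzy = [[0.5, -0.5, 2.0], [0.2, -0.8, -3.0]]  # many pixels near the cutoff
print(stability_score(crisp), stability_score(fuzzy))  # 1.0 0.2
print(keep_mask(crisp, 0.92), keep_mask(fuzzy, 0.92))  # True False
```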

SAM’s structure: An overview

SAM consists of three components:

an image encoder

a flexible prompt encoder

a fast mask decoder

Image encoder

Motivated by scalability and powerful pre-training methods, SAM uses a Masked Autoencoder (MAE) pre-trained Vision Transformer (ViT) minimally adapted to process high-resolution inputs. The image encoder runs once per image and can be applied prior to prompting the model.
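In the released models, the image encoder works on a fixed 1024×1024 input, and with 16×16-pixel ViT patches it produces a 64×64 grid of embeddings. Images are therefore resized so their longest side is 1024 pixels and then padded to a square, and point or box prompts must be rescaled by the same factor. The arithmetic is sketched below; the helper names are hypothetical, and the rounding is modeled on, not copied from, the reference preprocessing code.

```python
TARGET = 1024  # square input side expected by the released SAM image encoder
PATCH = 16     # ViT patch size: the embedding grid is TARGET // PATCH per side


def preprocess_shape(height, width, long_side=TARGET):
    """Resize so the longest side equals `long_side`, preserving aspect ratio."""
    scale = long_side / max(height, width)
    return int(height * scale + 0.5), int(width * scale + 0.5), scale


def padding(new_h, new_w, side=TARGET):
    """Bottom/right padding needed to reach a square side x side input."""
    return side - new_h, side - new_w


def scale_point(x, y, scale):
    """Point prompts in original-image pixels must be rescaled the same way."""
    return x * scale, y * scale


new_h, new_w, s = preprocess_shape(480, 640)  # e.g. a 640x480 photo
pad_h, pad_w = padding(new_h, new_w)
grid = TARGET // PATCH
print(new_h, new_w, pad_h, pad_w, grid)  # 768 1024 256 0 64
```

For this 640×480 example the scale factor is 1.6, so a click at pixel (320, 240) in the original image lands at roughly (512, 384) in the encoder's input frame.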

Prompt encoder

SAM considers two sets of prompts: sparse (points, boxes, text) and dense (masks). SAM represents points and boxes by positional encodings summed with learned embeddings for each prompt type, and free-form text with an off-the-shelf text encoder from CLIP. Dense prompts (i.e., masks) are embedded using convolutions and summed element-wise with the image embedding. Note that text prompting was presented in the SAM paper as an exploratory result; the publicly released model accepts points, boxes, and masks.
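The sparse-prompt path can be illustrated with a small sketch: each point becomes a positional encoding of its location plus a learned embedding for its type, and a box becomes two such tokens, one per corner. The positional encoding here uses random Fourier features (sines and cosines of a random Gaussian projection of the normalized coordinates), modeled on the approach in the reference implementation. Everything else is an assumption for illustration: the width `DIM`, the random projection `B`, and `TYPE_EMBED`, which stands in for embeddings the real model learns during training.

```python
import math
import random

DIM = 8  # toy embedding width (the released model uses 256)
rng = random.Random(0)

# Random Gaussian projection used for Fourier-feature positional encoding.
B = [[rng.gauss(0.0, 1.0) for _ in range(DIM // 2)] for _ in range(2)]

# Stand-ins for the learned per-type embeddings (trained weights in the real model).
TYPE_EMBED = {
    kind: [rng.gauss(0.0, 0.1) for _ in range(DIM)]
    for kind in ("foreground", "background", "box_top_left", "box_bottom_right")
}


def positional_encoding(x, y, width, height):
    """Sin/cos Fourier features of a pixel's position, normalized to [-1, 1]."""
    u, v = 2 * x / width - 1, 2 * y / height - 1
    proj = [2 * math.pi * (u * B[0][k] + v * B[1][k]) for k in range(DIM // 2)]
    return [math.sin(p) for p in proj] + [math.cos(p) for p in proj]


def embed_point(x, y, kind, width, height):
    """Sparse prompt token = positional encoding + learned embedding for its type."""
    pe = positional_encoding(x, y, width, height)
    return [a + b for a, b in zip(pe, TYPE_EMBED[kind])]


def embed_box(x0, y0, x1, y1, width, height):
    """A box becomes two tokens, one per corner, each tagged with its corner type."""
    return [embed_point(x0, y0, "box_top_left", width, height),
            embed_point(x1, y1, "box_bottom_right", width, height)]


tokens = [embed_point(120, 80, "foreground", 640, 480)]
tokens += embed_box(100, 60, 220, 200, 640, 480)
print(len(tokens), len(tokens[0]))  # 3 8
```

Adding a type embedding to the positional encoding lets the decoder tell a foreground click from a background click, or a box corner, at the same location.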

Mask decoder

The mask decoder efficiently maps the image embedding, prompt embeddings, and an output token to a mask. This design employs a modification of a Transformer decoder block followed by a dynamic mask prediction head.

SAM’s modified decoder block uses prompt self-attention and cross-attention in two directions (prompt-to-image embedding and vice versa) to update all embeddings. After running two blocks, SAM upsamples the image embedding, and an MLP maps the output token to a dynamic linear classifier, which then computes the mask foreground probability at each image location.

Segment Anything 1-Billion Mask Dataset

Training a model like SAM requires a massive and diverse dataset, which was not readily available when the project began. To address this challenge, the team behind SAM developed the SA-1B dataset, which consists of over 1.1 billion high-quality segmentation masks collected from approximately 11 million licensed and privacy-preserving images.

The dataset was created with a combination of interactive and automatic annotation methods, which made data collection far faster than purely manual annotation. With 1.1 billion masks, SA-1B contains roughly 400 times more masks than any segmentation dataset that existed before it.

How does SAM support real-life use cases?

Versatile segmentation: SAM's promptable interface allows users to specify segmentation tasks using various prompts, making it adaptable to diverse real-world scenarios.

For example, SAM's versatile segmentation capabilities find application in environmental monitoring, where it can analyze ecosystems, detect deforestation, track wildlife, and assess land use. For wetland monitoring, SAM can segment aquatic vegetation and habitats. In deforestation detection, it can identify areas of forest loss. In wildlife tracking, it can help analyze animal behavior, and in land use analysis, it can categorize land use in aerial imagery. SAM's adaptability enables valuable insights for conservation, urban planning, and environmental research.

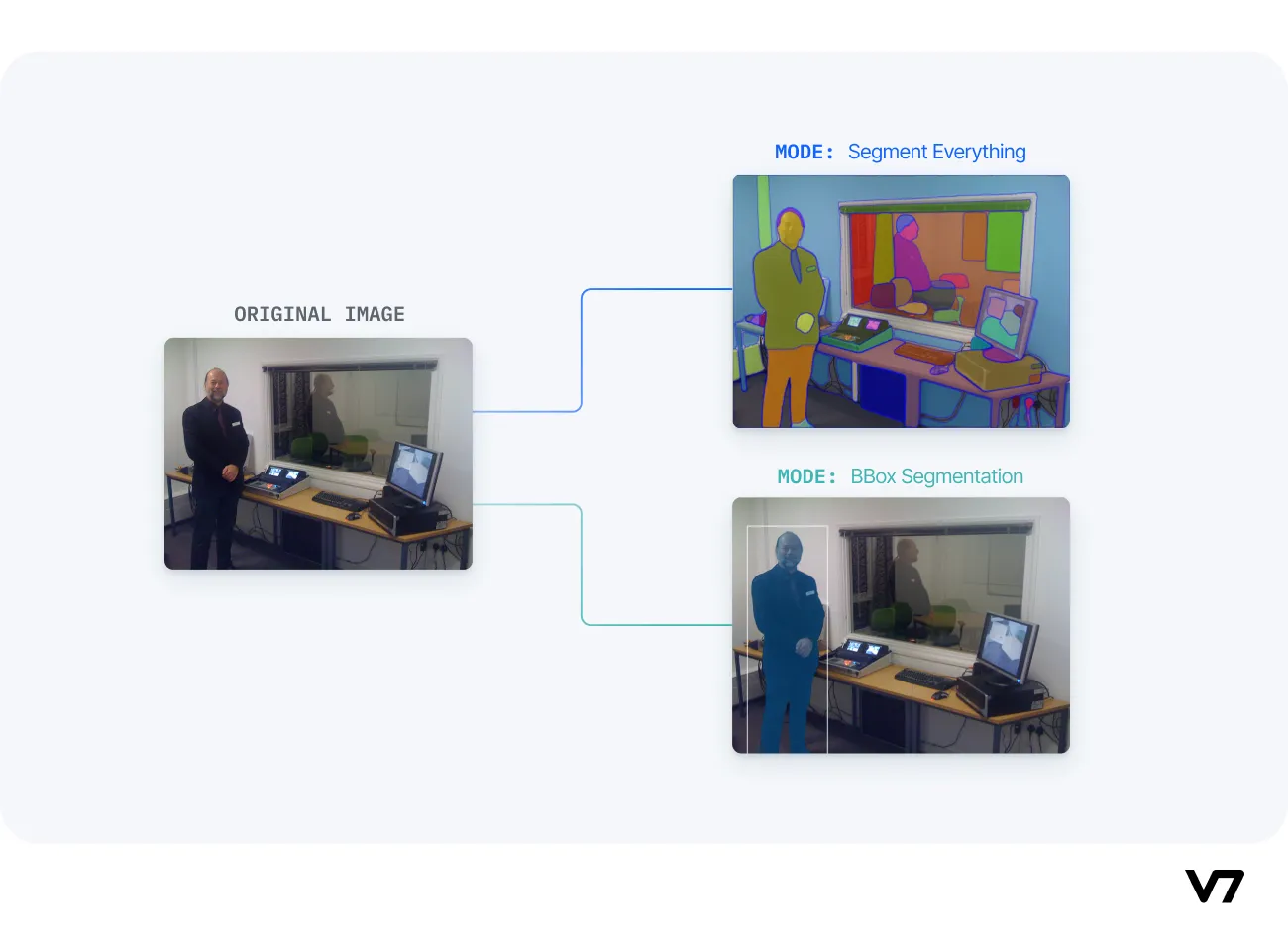

SAM can be asked to segment everything in an image, or it can be given a bounding box to segment a particular object, as illustrated with an image from the COCO dataset.

Original image taken from the MSCOCO dataset; segmentation performed using SAM demo
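In the open-source release, “segment everything” mode is provided by `SamAutomaticMaskGenerator`, which prompts SAM with a regular grid of points (32 per side by default, matching the 32×32 grid the paper describes for automatic annotation) and then filters and de-duplicates the resulting masks. The sketch below shows the grid construction and a greedy non-maximum suppression over mask bounding boxes. The helper names, the toy boxes, and the 0.7 overlap threshold are illustrative assumptions, not the library's code.

```python
def build_point_grid(n_per_side):
    """Prompt points at the centers of an n x n grid, in normalized [0, 1] coordinates."""
    coords = [(2 * i + 1) / (2 * n_per_side) for i in range(n_per_side)]
    return [(x, y) for y in coords for x in coords]


def to_pixels(points, width, height):
    """Convert normalized points to pixel coordinates for a width x height image."""
    return [(x * width, y * height) for x, y in points]


def box_iou(a, b):
    """IoU of two (x0, y0, x1, y1) boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0


def nms(candidates, iou_thresh=0.7):
    """Greedy non-maximum suppression over (box, score) pairs, best score first."""
    kept = []
    for box, score in sorted(candidates, key=lambda c: c[1], reverse=True):
        if all(box_iou(box, k) <= iou_thresh for k, _ in kept):
            kept.append((box, score))
    return kept


grid = to_pixels(build_point_grid(32), 640, 480)
print(len(grid), grid[0])  # 1024 (10.0, 7.5)

# Toy candidates: the first two boxes overlap heavily (IoU 0.81), the third is separate.
cands = [((0, 0, 10, 10), 0.90), ((1, 1, 10, 10), 0.80), ((20, 20, 30, 30), 0.85)]
print(nms(cands))  # [((0, 0, 10, 10), 0.9), ((20, 20, 30, 30), 0.85)]
```

With 1,024 grid points and up to three masks per point, the model can propose up to 3,072 candidate masks for a single image before filtering, which is why confidence filtering and de-duplication matter.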

Zero-Shot Transfer: SAM's ability to generalize to new objects and image domains without additional training (zero-shot transfer) is invaluable in real-life applications. Users can apply SAM "out of the box" to new image domains, reducing the need for task-specific models.

Zero-shot transfer in SAM can streamline fashion retail by helping e-commerce platforms introduce new clothing lines. SAM can segment new fashion items, for example to separate products from their backgrounds, without model training specific to those items, which helps keep product listings consistent and professional. This can speed up adaptation to fashion trends and make online shopping experiences more engaging and efficient.

Real-Time Interaction: SAM's efficient architecture enables real-time interaction with the model. This is crucial for applications like augmented reality, where users need immediate feedback, or content creation tasks that require rapid segmentation.

Multimodal Understanding: SAM's promptable segmentation can be integrated into larger AI systems for more comprehensive multimodal understanding, such as interpreting both text and visual content on webpages.

Efficient Data Annotation: SAM's data engine accelerates the creation of large-scale datasets, reducing the time and resources required for manual data annotation. This benefit extends to researchers and developers working on their own segmentation tasks.

Equitable Data Collection: SAM's dataset creation process aims for better representation across diverse geographic regions and demographic groups, making it more equitable and suitable for real-world applications that involve varied populations.

Content Creation and AR/VR: SAM's segmentation capabilities can enhance content creation tools by automating object extraction for collages or video editing. In AR/VR, it enables object selection and transformation, enriching the user experience.

Scientific Research: SAM's ability to localize objects, which can be applied frame by frame to follow objects through videos, has applications in scientific research, from monitoring natural occurrences to studying phenomena captured on video, offering insights and advancing various fields.

Overall, SAM's versatility, adaptability, and real-time capabilities make it a valuable tool for addressing real-life image segmentation challenges across diverse industries and applications.

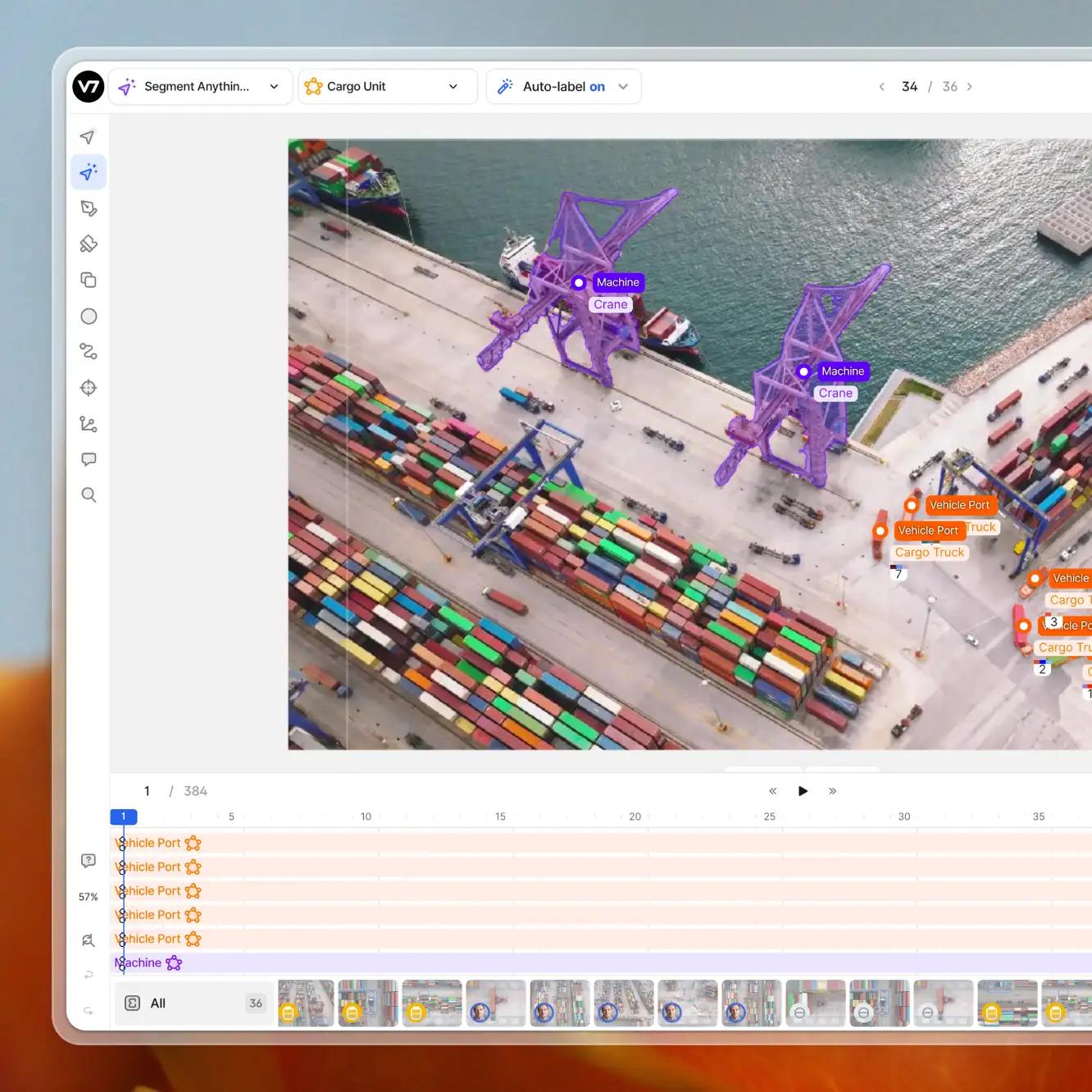

How to use SAM for AI-assisted labeling? V7 tutorial

V7 integrates with SAM. In combination with V7's Workflows, you can effectively use SAM to speed up segmentation without sacrificing labeling quality.

SAM is also the primary engine for V7’s Auto-Annotate tool.

Check out the documentation for more information on the integration.

What does the future hold for SAM?

In conclusion, SAM stands as a groundbreaking advancement in the realm of image segmentation, ushering in a new era of accessibility, efficiency, and versatility. Its remarkable ability to generalize to new tasks and domains signifies a paradigm shift in how we approach image analysis.

By simplifying the segmentation process and reducing the need for task-specific models, SAM empowers users across diverse industries to tackle image segmentation challenges with unprecedented ease.

As we look ahead, the concept of composition, driven by techniques like prompt engineering, emerges as a powerful tool, allowing SAM to adapt to tasks yet unknown at the time of its design. This opens doors to a world of possibilities, where SAM's composable system design can cater to an even wider array of applications, transcending the constraints of fixed-task systems. The future holds immense promise as SAM continues to redefine the boundaries of image segmentation and multimodal understanding.