Computer vision

COCO Dataset: All You Need to Know to Get Started

13 min read

—

Jan 19, 2023

COCO dataset is commonly used in machine learning—both for research and practical applications. Let's dive deeper into the COCO dataset and its significance for computer vision tasks.

Guest Author

Artificial intelligence relies on data. The process of building and deploying AI and machine learning systems requires large and diverse data sets.

The variability and quality of the data play a crucial role in determining the capabilities and accuracy of machine learning models. Only highly qualitative data will guarantee efficient performance. One of the easiest ways to obtain high-quality data is by using pre-existing, well-established benchmark datasets.

A benchmark dataset commonly used in machine learning—both for research and practical applications—is the COCO dataset.

In this article, we’ll dive deeper into the COCO dataset and its significance for computer vision tasks.

Here’s what we’ll cover:

What is the COCO dataset?

How to use MS COCO?

COCO dataset formats

What is the COCO dataset?

The COCO (Common Objects in Context) dataset is a large-scale image recognition dataset for object detection, segmentation, and captioning tasks. It contains over 330,000 images, each annotated with 80 object categories and 5 captions describing the scene. The COCO dataset is widely used in computer vision research and has been used to train and evaluate many state-of-the-art object detection and segmentation models.

The dataset has two main parts: the images and their annotations.

The images are organized into a hierarchy of directories, with the top-level directory containing subdirectories for the train, validation, and test sets.

The annotations are provided in JSON format, with each file corresponding to a single image.

Each annotation in the dataset includes the following information:

Image file name

Image size (width and height)

List of objects with the following information: Object class (e.g., "person," "car"); Bounding box coordinates (x, y, width, height); Segmentation mask (polygon or RLE format); Keypoints and their positions (if available)

Five captions describing the scene

The COCO dataset also provides additional information, such as image super categories, license, and coco-stuff (pixel-wise annotations for stuff classes in addition to 80 object classes).

MS COCO offers various types of annotations,

Object detection with bounding box coordinates and full segmentation masks for 80 different objects

Stuff image segmentation with pixel maps displaying 91 amorphous background areas

Panoptic segmentation identifies items in images based on 80 "things" and 91 "stuff" categories

Dense pose with over 39,000 photos featuring over 56,000 tagged persons with a mapping between pixels and a template 3D model and natural language descriptions for each image

Keypoint annotations for over 250,000 persons annotated with key points such as the right eye, nose, and left hip

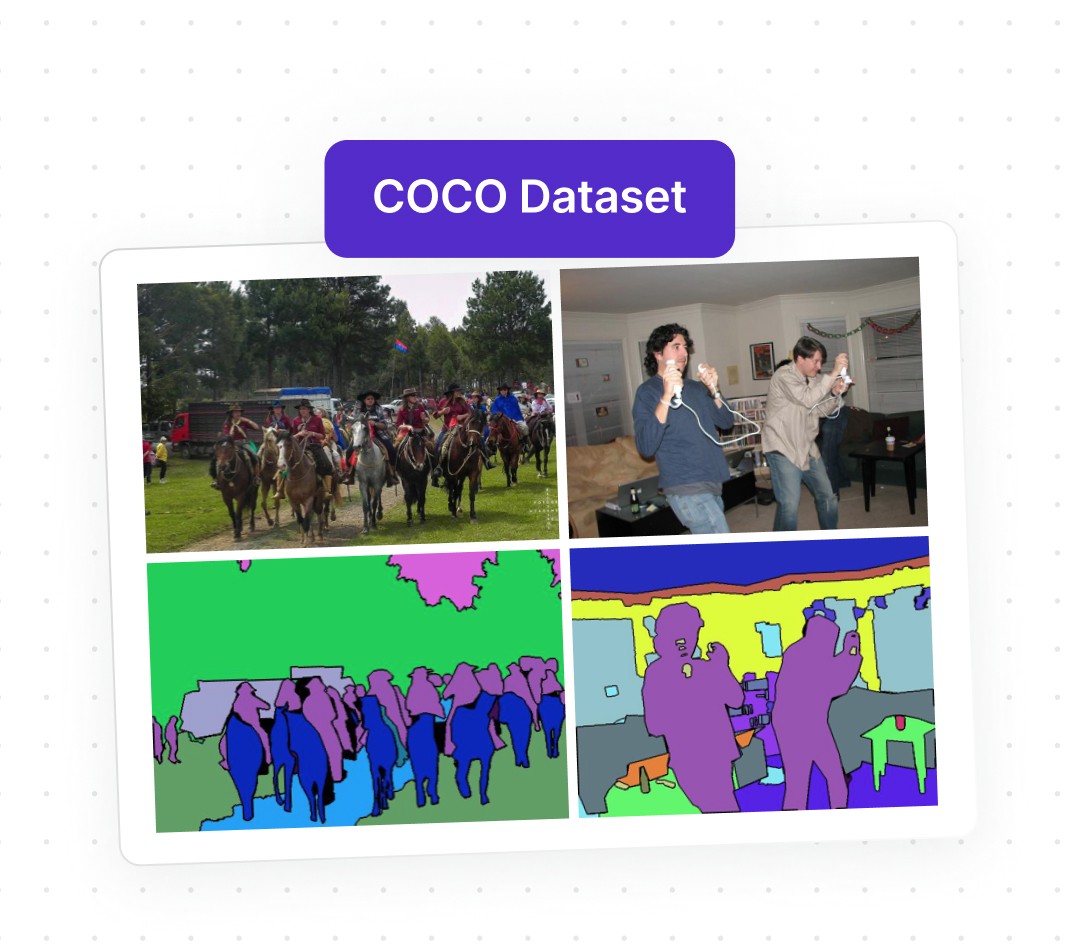

Image from COCO dataset (source)

MS COCO dataset classes

The COCO (Common Objects in Context) dataset classes are divided into two main categories: "things" and "stuff."

"Things" classes include objects easily picked up or handled, such as animals, vehicles, and household items. Examples of "things" classes in COCO are:

Person

Bicycle

Car

Motorcycle

"Stuff" classes include background or environmental items such as sky, water, and road. Examples of "stuff" classes in COCO are:

Sky

Tree

Road

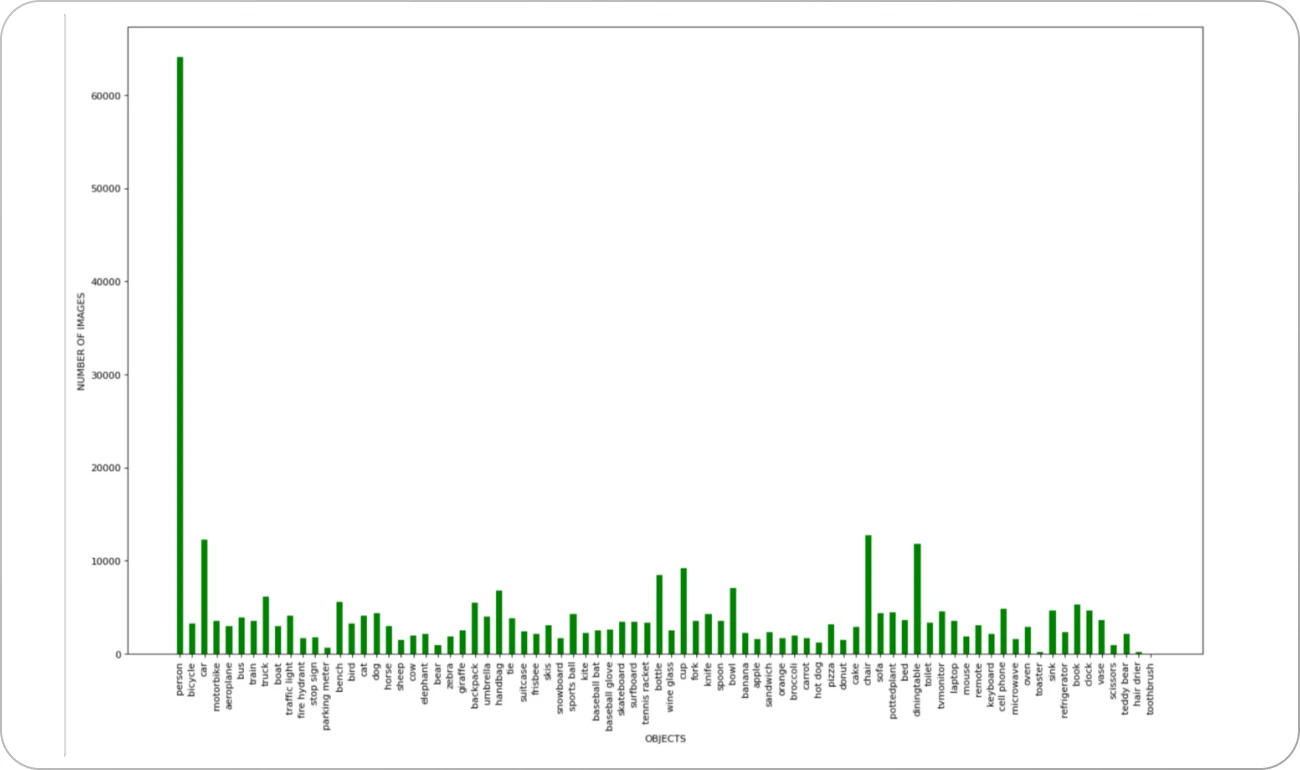

The below image represents a complete list of 80 classes that COCO has to offer.

COCO dataset class list (source)

It’s important to note that the COCO dataset suffers from inherent bias due to class imbalance.

Class imbalance happens when the number of samples in one class significantly differs from other classes. In the COCO dataset context, some objects' classes have many more image instances than others.

The class imbalance may lead to bias in the training and evaluation of machine learning models. This is because the model is exposed to more examples of the frequent classes, so it learns to recognize them better. As a result, the model may need help recognizing the less frequent classes and performing poorly in them.

Additionally, bias in the dataset can lead to the model overfit on the majority class, which means that it will perform well in this class but poorly in other classes. Several techniques can be used to mitigate the class imbalance issue, such as oversampling, undersampling, and synthetic data generation.

Class imbalance in the COCO dataset (source)

How to use the COCO dataset?

The COCO dataset serves as a baseline for computer vision to train, test, finetune, and optimize models for quicker scalability of the annotation pipeline.

Let’s see how to leverage the COCO dataset for different computer vision tasks.

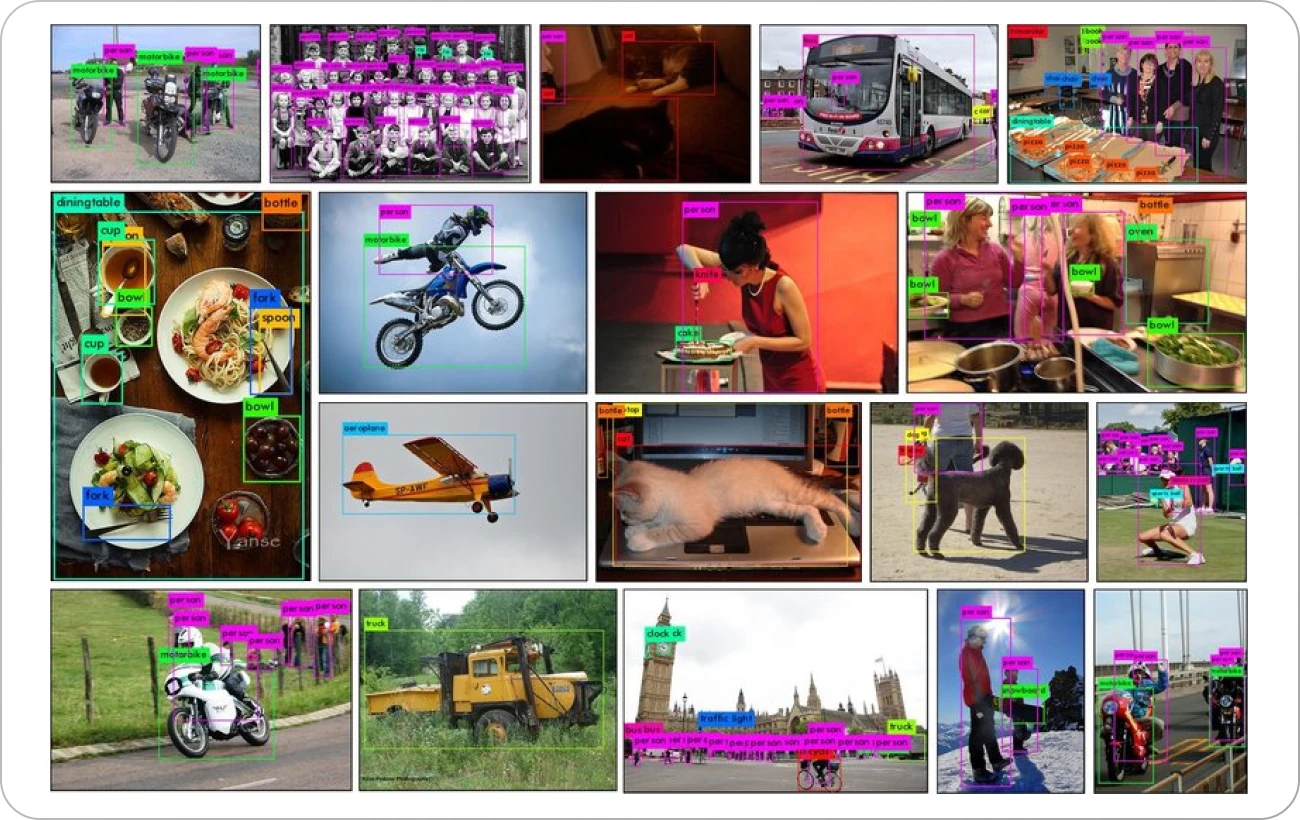

Object detection

Object detection is the most popular computer vision application. It detects objects with bounding boxes to enable their classification and localization in an image.

The COCO dataset can be used to train object detection models. The dataset provides bounding box coordinates for 80 different types of objects, which can be used to train models to detect bounding boxes and classify objects in the images.

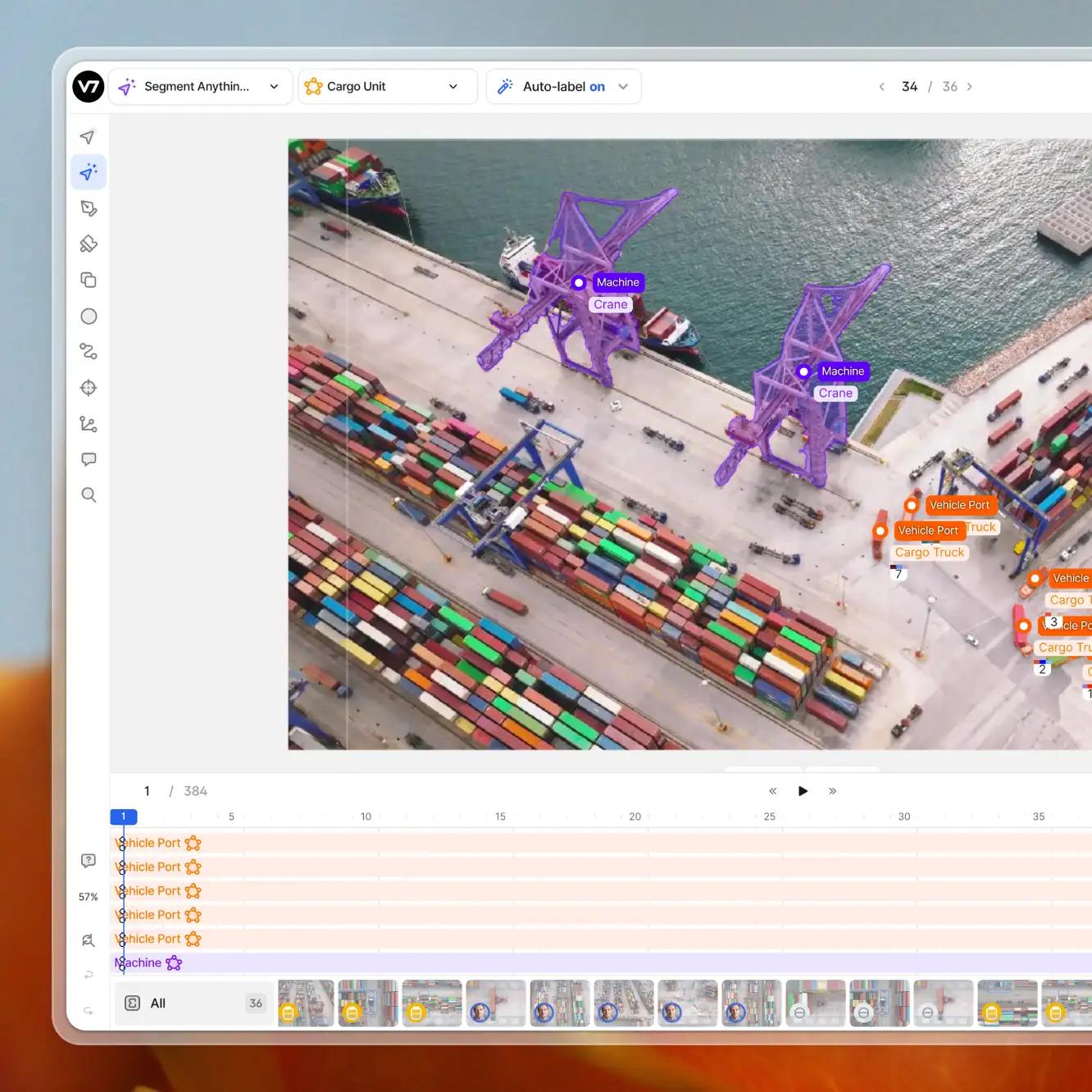

Results of Yolo v3 in the COCO test dataset (source)

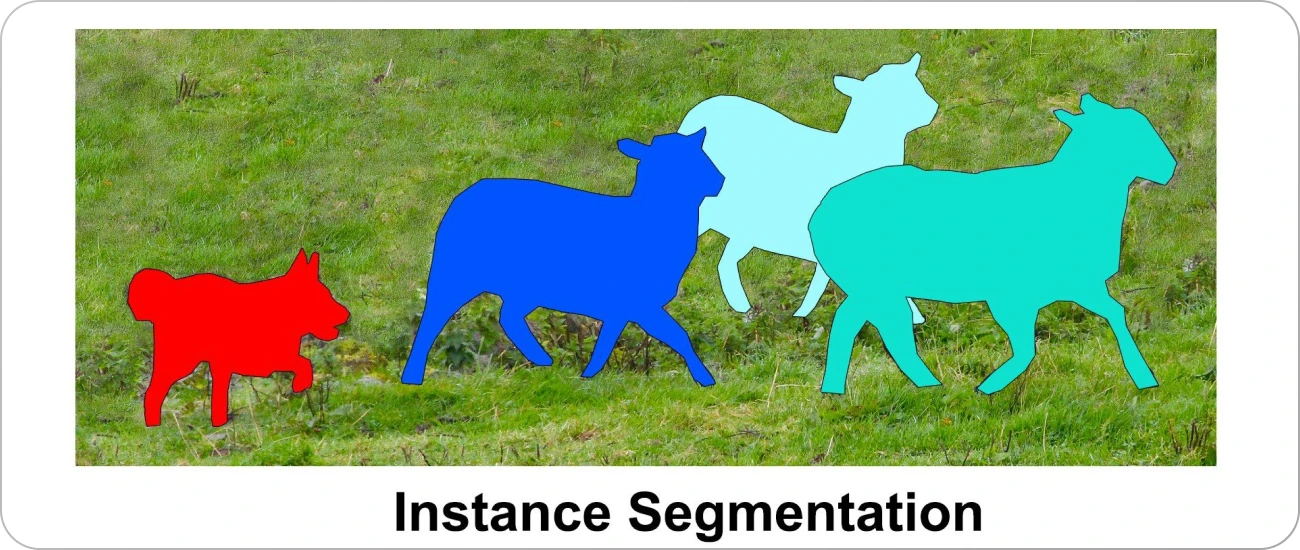

Instance segmentation

Instance segmentation is a task in computer vision that involves identifying and segmenting individual objects within an image while assigning a unique label to each instance of an object.

Instance segmentation models typically use object detection techniques, such as bounding box regression and non-maximum suppression, to first identify the locations of objects in the image. Then, the models use semantic segmentation techniques, such as Convolutional Neural Networks (CNNs), to segment the objects within the bounding boxes and assign unique labels to each instance.

The COCO dataset contains instance segmentation annotations, which can be used to train models for this task.

Instance segmentation on an image from the COCO test dataset (source)

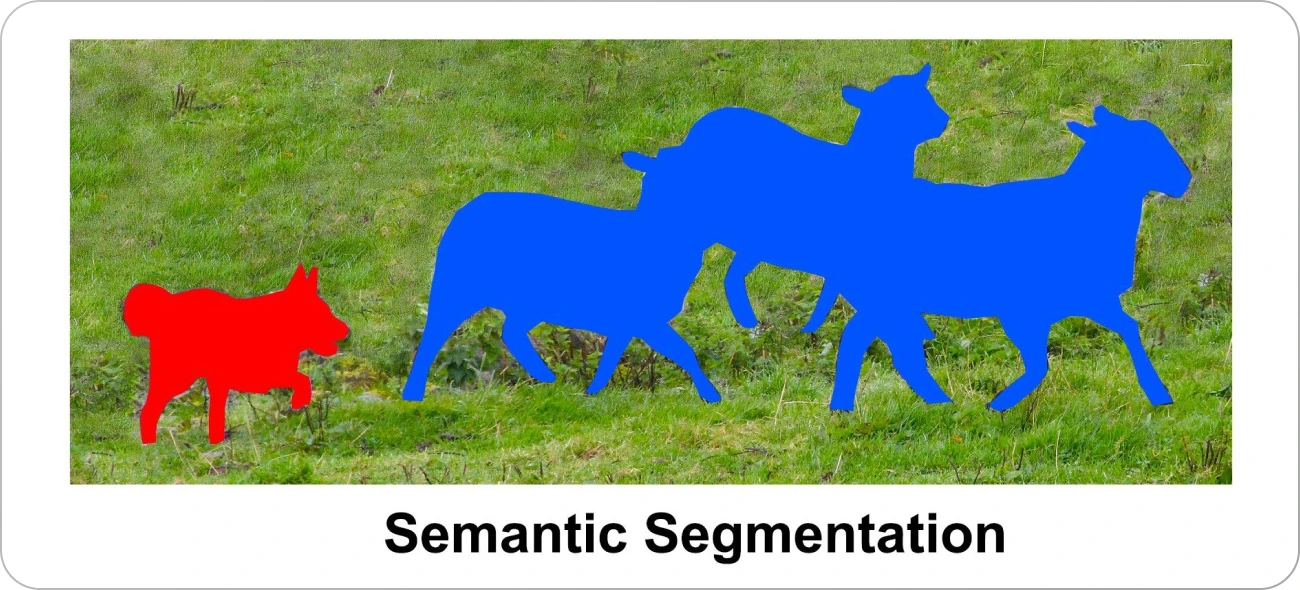

Semantic segmentation

Semantic segmentation is a computer vision task that involves classifying each pixel in an image into one of several predefined classes. It is different from instance segmentation which focuses on identifying and segmenting each object instance as a separate entity in the image.

To train a semantic segmentation model, we need a dataset that contains images with corresponding pixel-level annotations for each class in the image. These annotations are typically provided in the form of masks, where each pixel is assigned a label indicating the class to which it belongs.

For example, if we have an image of a city, semantic segmentation would involve classifying each pixel in the image as belonging to one of several classes, such as roads, buildings, trees, sky, and so on.

COCO is good for semantic segmentation since it contains many images with corresponding pixel-level annotations for each class in the image.

Once a dataset is available, a deep learning model such as a Fully Convolutional Network (FCN), U-Net, or Mask-RCNN can be trained. These models are designed to take an image as input and produce a segmentation mask as output. After training, the model can segment new images and provide accurate and detailed annotations.

Semantic segmentation on an image from the COCO test dataset (source)

Keypoint detection

Keypoint detection, also known as keypoint estimation, is a computer vision task that involves identifying specific points of interest in an image, such as the corners of an object or the joints of a person's body. These key points are typically used to represent the object or person in the image. They can be used for various applications such as object tracking, motion analysis, and human-computer interaction.

The COCO dataset includes keypoint annotations for over 250,000 people in more than 200,000 images. These annotations provide the x and y coordinates of 17 keypoints on the body, such as the right elbow, left knee, and right ankle.

Researchers and practitioners can train a deep learning model such as Multi-Person Pose Estimation (MPPE) or OpenPose on the COCO dataset. These models are designed to take an image as input and produce a set of keypoints as output.

Keypoint annotation examples in COCO dataset (source)

Panoptic segmentation

Panoptic segmentation is a computer vision task that involves identifying and segmenting all objects and backgrounds in an image, including both "things" (distinct objects) and "stuff" (amorphous regions of the image, such as sky, water, and road). It combines both instance and semantic segmentation, where instance segmentation is used to segment objects, and semantic segmentation is used to segment the background.

In the context of the COCO dataset, panoptic segmentation annotations provide complete scene segmentation, identifying items in images based on 80 "things" and 91 "stuff" categories.

The COCO dataset also includes evaluation metrics for panoptic segmentation, such as PQ (panoptic quality) and SQ (stuff quality), which are used to measure the performance of models trained on the dataset.

To use a panoptic segmentation model, we input an image. The model produces a panoptic segmentation map, an image with the exact same resolution as the input image. Still, each pixel is assigned a label indicating whether it belongs to a "thing" or a "stuff" category, as well as an instance ID for "thing" pixels.

Panoptic segmentation data annotation samples in COCO dataset (source)

Dense pose

The dense pose is a computer vision task that estimates the 3D pose of objects or people in an image. It is a challenging task as it requires not only detecting the objects but also estimating the position and orientation of each part of the object, such as the head, arms, legs, etc.

In the context of the COCO dataset, dense pose refers to the annotations provided in the dataset that map pixels in images of people to a 3D model of the human body. These annotations are provided for over 39,000 photos in the dataset and feature over 56,000 tagged persons. Each person is given an instance ID, a mapping between pixels indicating that person's body, and a template 3D model.

To use the dense pose information from the COCO dataset, researchers can train a deep learning model such as DensePose-RCNN on the dataset. The dense pose estimate includes the 3D position and orientation of each part of the human body in the image.

Visualization of annotations: Image (left), U (middle), and V (right) values for the collected points (source)

Stuff image segmentation

Semantic classes can be categorized as things (items with a clearly defined shape, such as a person or a car) or stuff (amorphous background regions, e.g., grass, sky).

Classes of “stuff” objects are important because they help to explain important parts of an image, including the type of scene, the likelihood of particular objects being present, and where they might be located (based on context, physical characteristics, materials, and geometric properties of the scene).

Annotated images from the COCO-Stuff dataset with dense pixel-level annotations for stuff and things (source)

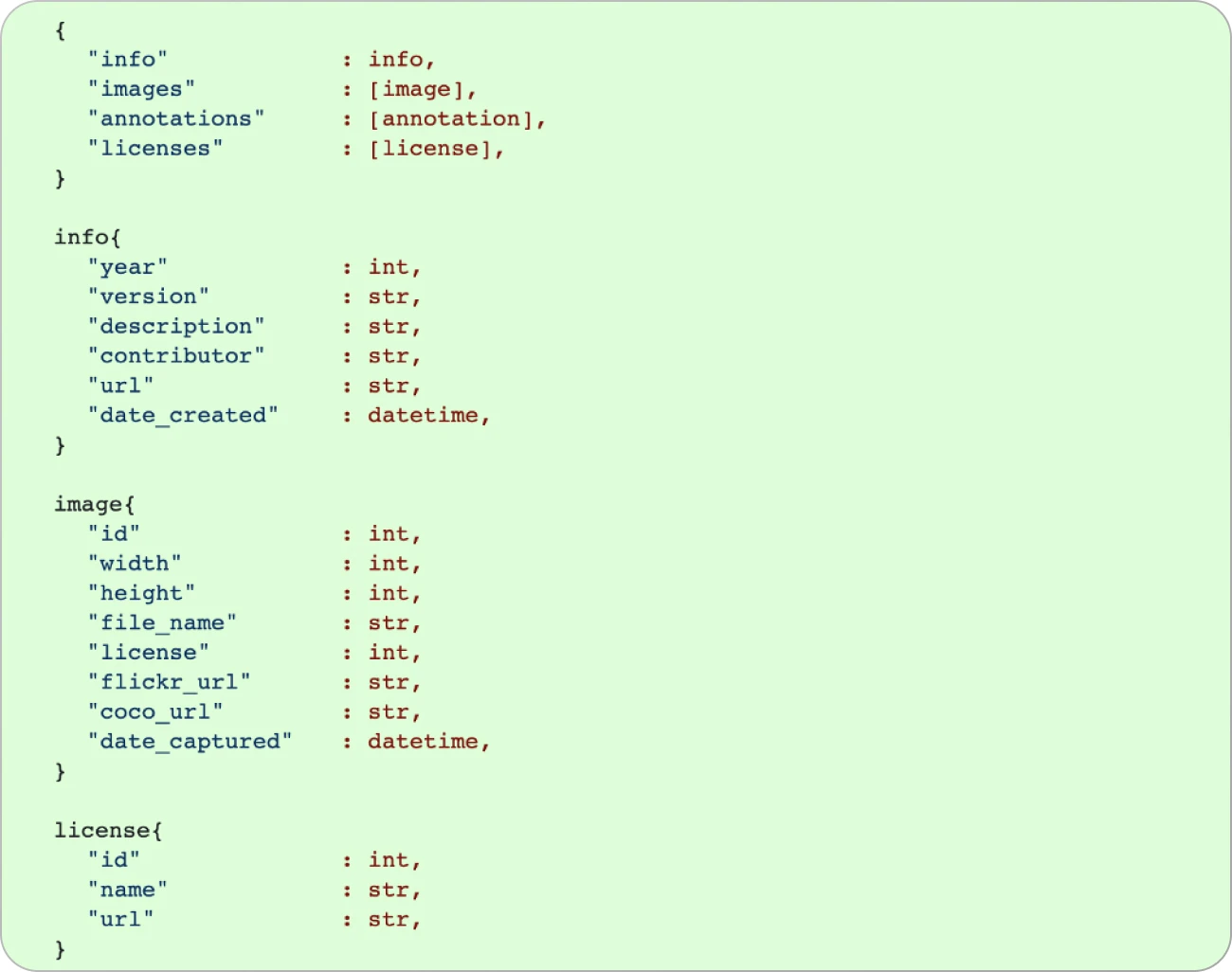

COCO dataset formats

The COCO dataset uses a JSON format that provides information about each dataset and all its images. Here is a sample of what the structure of the COCO dataset looks like:

COCO Sample JSON (source)

The COCO dataset includes two main formats: JSON and image files.

The JSON format includes the following attributes:

Info: general information about the dataset, such as version number, date created, and contributor information

Licenses: information about the licenses for the images in the dataset

Images: a list of all the images in the dataset, including the file path, width, height, and other metadata

Annotations: a list of all the object annotations for each image, including the object category, bounding box coordinates, and segmentation masks (if available)

Categories: a list of all the dataset object categories, including each category's name and ID

Each field in the JSON represents:

info: metadata about the dataset such as version, date created, and contributor information

licenses: licenses associated with the images in the dataset

images: contains details such as file path, height, width, and other metadata

annotations: contains object category, bounding box coordinates, and segmentation masks

categories: contains the name and ID of all the object categories in the dataset

The image files are the actual image files that correspond to the images in the JSON file. These files are typically provided in JPEG or PNG format and are used to display the images in the dataset.

Let’s go through the image attributes in detail.

Licenses

The licenses section provides details about the licenses of images included in the dataset, so you can understand how you are allowed to use them in your work. Below is an example of license info.

In this example, the "licenses" field is an array containing multiple license objects. Each license object has three fields: "url," "id," and "name." The "url" field contains the URL of the license, the "id" field is a unique identifier for the license, and the "name" field contains the name of the license.

Categories

The "categories" field in the COCO JSON is a list of objects that define the different categories or classes of objects in the dataset. Each object in the list contains the following fields:

"id": a unique integer identifier for the category

"name": the name of the category

"supercategory": an optional field specifying a broader category than the current one

For example, in a COCO dataset of images containing different types of vehicles, the "categories" field might look like this:

Images

The "images" field is an array that contains information about each image in the dataset. Each element in the array is a dictionary that contains the following key-value pairs:

"id": integer, a unique image id

"width": integer, the width of the image

"height": integer, the height of the image

"file_name": string, the file name of the image

"license": integer, the license id of the image

"flickr_url": string, the URL of the image on Flickr (if available)

"coco_url": string, the URL of the image on the COCO website (if available)

Here is an example of the "images" field in a COCO JSON file:

Annotations

The annotations field in the COCO JSON file is a list of annotation objects that provide detailed information about the objects in an image. Each annotation object contains information such as the object's class label, bounding box coordinates, and segmentation mask.

bbox

The "bbox" field refers to the bounding box coordinates for an object in an image. The bounding box is represented by four values: the x and y coordinates of the top-left corner and the width and height of the box. These values are all normalized, representing them as fractions of the image width and height.

Here is an example of the "bbox" field in a COCO JSON file:

In this example, the first annotation has a bounding box with the top-left corner at (0.1, 0.2) and a width and height of 0.3 and 0.4, respectively. The second annotation has a bounding box with the top-left corner at (0.5, 0.6) and a width and height of 0.7 and 0.8, respectively.

Segmentation

The segmentation field in the COCO JSON refers to the object instance segmentation masks for an image. The segmentation field is an array of dictionaries, and each dictionary represents a single object instance in the image. Each dictionary contains a "segmentation" key, an array of arrays representing the pixel-wise segmentation mask for that object instance.

Example of a segmentation field in COCO JSON:

In this example, the two object instances in the image are represented by a dictionary in the "annotations" array. The "segmentation" key in each dictionary is an array of arrays, where each array represents a set of x and y coordinates that make up the pixel-wise segmentation mask for that object instance.

The other keys in the dictionary provide additional information about the object instance, such as its bounding box, area, and category.

Key takeaways

The COCO dataset aims to push the boundaries of object recognition by incorporating it into the larger concept of understanding scenes.

COCO is a vast dataset that includes object detection, segmentation, and captioning. It has the following characteristics:

Object segmentation

Recognition within a context

Segmentation of smaller parts of objects

More than 330,000 images (> 200,000 labeled)

1.5 million object instances

80 different object categories

As the COCO dataset includes images from various backgrounds and settings, trained models can better recognize images in different contexts.