Dec 19, 2022

Find out how to write annotation guidelines for computer vision projects. Discover best practices for preparing clear data annotation instructions for your team.

Senior Delivery Manager

Data annotation is a notoriously tricky task, and when it’s done incorrectly, it can cost you time and money.

Very often, a seemingly straightforward annotation can cause a lot of confusion. Are these two objects the same? Is this object in the background relevant to the task? Should I label this blurry thing as a "car"?

In many cases, these doubts could be resolved by having a comprehensive set of instructions and examples.

But many machine learning teams underestimate the importance of clear annotation guidelines, and end up manually fixing hundreds of incorrect labels and masks afterwards.

In this guide, we’ll show you how to write effective annotation instructions, so you can avoid costly mistakes.

Here are five best practices for preparing high-quality annotation guidelines:

Address domain-specific knowledge gaps

Identify edge cases and show examples

Use the right format and type of documentation

Leverage existing golden datasets

Offer regular feedback during the annotation process

Let’s discuss them one by one.

1. Address domain-specific knowledge gaps

This may matter less if, for example, your annotators are medical professionals labeling DICOM files, but most annotators need special guidance on domain-specific use cases. Not everyone is an expert in solar panel production, agriculture, warehousing, or retail.

Annotators who lack background knowledge about the industry may miss important details that are obvious to a specialist.

Make sure to clarify any industry-specific terms or classes that might confuse the labelers. In short, if domain knowledge is required for the labeling project, annotation guidelines must provide all the necessary background information on the dataset.

2. Identify edge cases and show examples

Edge cases are common in data labeling, because real-world data is rarely uniform and usually contains outliers.

The cost of ignoring edge cases is high, and there are well-known examples of autonomous systems failing in situations their developers had not accounted for. For example, in 2016, one of Google's self-driving cars collided with a bus after steering around sandbags surrounding a storm drain and misinterpreting the situation.

The image might be unclear, or the object might be occluded or only partially visible. If we only see 5% of a cat's tail, should annotators still label it? Is a very blurry image of a dog still a dog?

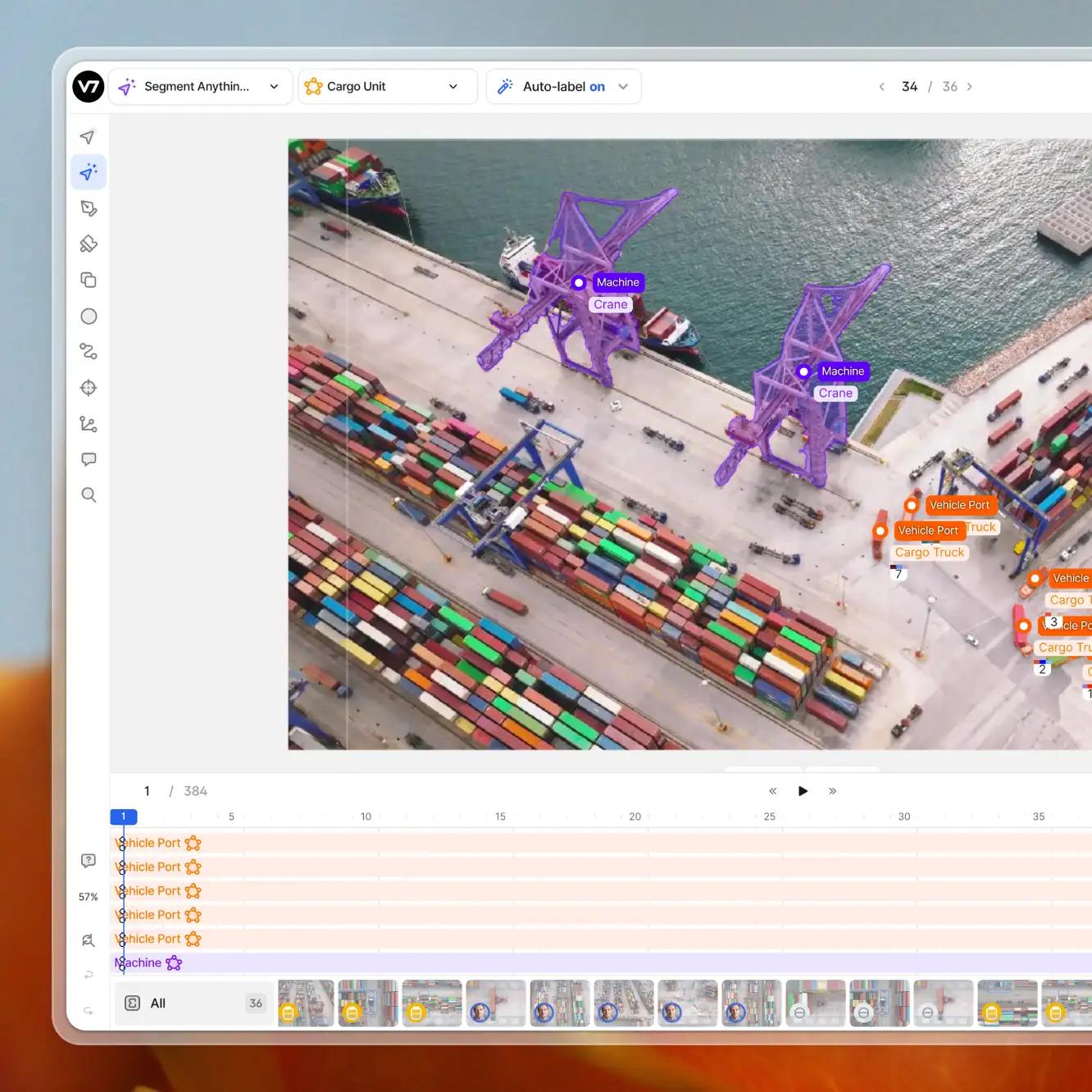

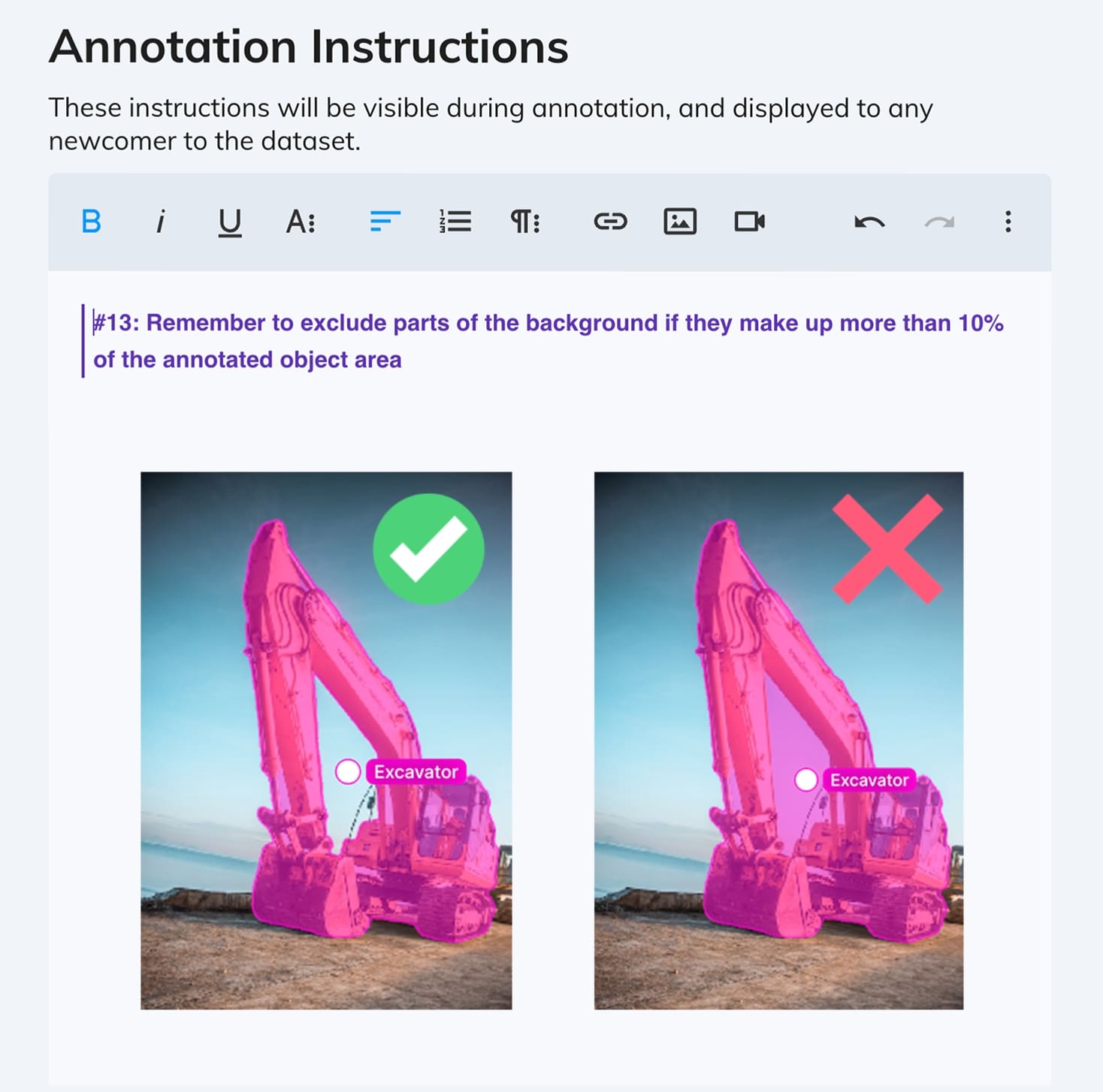

Make sure to define the scenarios that can occur in your data, including specific metrics for occlusion (for example, the minimum visible portion of an object that should still be labeled). Annotation guidelines should illustrate each and every label with examples. Images are easier to understand than complex explanations, and they help your workforce recognize different scenarios.
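One way to make such metrics unambiguous is to write them down as explicit thresholds. The sketch below encodes a hypothetical rule of the form "label an object only if at least N% of it is visible". The class names, threshold values, and the `Candidate` / `should_label` names are illustrative assumptions, not values from any real project or tool.

```python
from dataclasses import dataclass

# Hypothetical per-class minimum visible fraction; real values belong in your written guidelines.
MIN_VISIBLE_FRACTION = {
    "cat": 0.20,
    "car": 0.30,
}
DEFAULT_MIN_VISIBLE = 0.25  # assumed fallback for classes without an explicit rule


@dataclass
class Candidate:
    label: str
    visible_fraction: float  # annotator's estimate of how much of the object is visible (0.0 to 1.0)
    is_blurred: bool = False


def should_label(c: Candidate, allow_blurred: bool = True) -> bool:
    """Apply the (hypothetical) occlusion and blur rule to a single candidate object."""
    if not 0.0 <= c.visible_fraction <= 1.0:
        raise ValueError("visible_fraction must be between 0 and 1")
    if c.is_blurred and not allow_blurred:
        return False
    threshold = MIN_VISIBLE_FRACTION.get(c.label, DEFAULT_MIN_VISIBLE)
    return c.visible_fraction >= threshold


print(should_label(Candidate("cat", 0.05)))  # only 5% of the tail visible → False
print(should_label(Candidate("car", 0.60, is_blurred=True)))  # → True
```

Whatever thresholds you choose, pair each one with example images just above and just below the cutoff, so annotators can calibrate their estimates.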

3. Use the right format and type of documentation

Keep in mind that the length of the guidelines will depend on the complexity of the annotation project itself. Annotation instructions should always be consistent with other written documentation about the project, so avoid conflicting or confusing guidance.

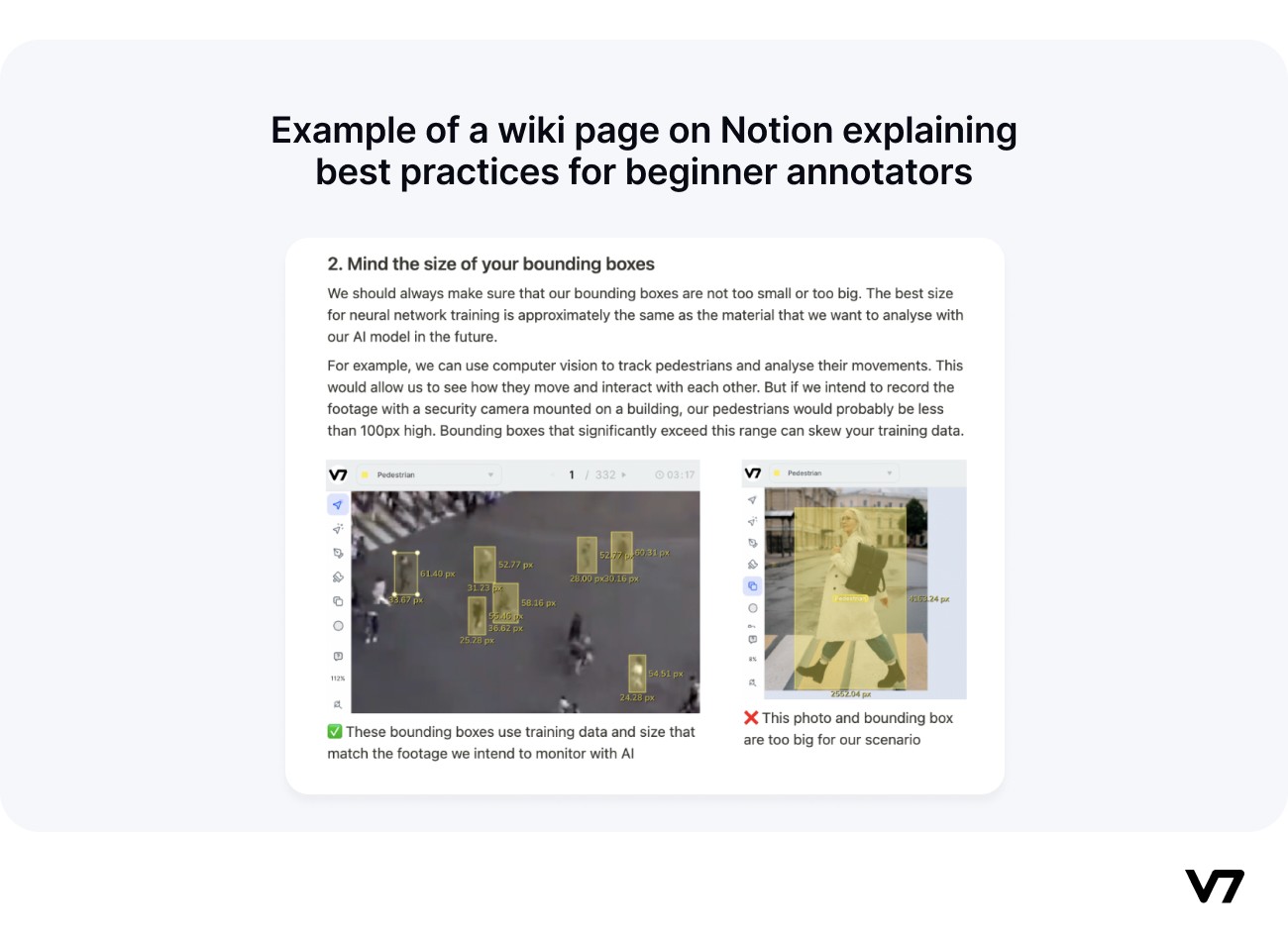

If the guidelines are complicated or lengthy, consider hosting them in a wiki tool such as Notion. Whatever the format, the information MUST be searchable and discoverable, so the workforce can quickly find the relevant guidance during the annotation process.

Within the guideline document itself, page numbers and a table of contents with hyperlinks can be useful for navigation. For example, you can create a dedicated section for each annotation class. In addition, some representative examples can be embedded alongside the instructions.

Last but not least, it is always good to explain the purpose of the annotation project if it's not confidential. Knowing the end goals is extremely useful. What is the purpose of the data? What type of model is going to be trained on it? What will the model be used for? If you can't answer these questions due to confidentiality, make sure to sign an NDA with your business partner first.

4. Leverage existing golden datasets

In computer vision, a golden dataset is a set of high-quality examples, labeled manually by experts, that is typically used as a benchmark for performance evaluation. In effect, it is an answer key prepared in advance by annotation project managers.

Using golden datasets is an ideal way to train the workforce. They are particularly useful for object detection projects with strictly defined ways to annotate. Golden datasets shorten the feedback loop between annotation project managers and the workforce. That’s because the correct annotations for given classes have already been determined in advance.
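To illustrate how pre-determined answers speed up feedback, here is a minimal sketch of scoring an annotator's bounding boxes against a golden set. It assumes one box per image and counts a match when the class is the same and the intersection over union (IoU) is at least 0.5, a common but arbitrary cutoff. The function names are hypothetical and not part of V7 or any other tool's API.

```python
from typing import Dict, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def score_against_golden(
    submitted: Dict[str, Tuple[str, Box]],
    golden: Dict[str, Tuple[str, Box]],
    iou_threshold: float = 0.5,
) -> float:
    """Fraction of golden items the annotator matched (same class and IoU >= threshold)."""
    if not golden:
        return 0.0
    correct = 0
    for item_id, (gold_label, gold_box) in golden.items():
        if item_id not in submitted:
            continue  # a missing annotation counts as incorrect
        label, box = submitted[item_id]
        if label == gold_label and iou(box, gold_box) >= iou_threshold:
            correct += 1
    return correct / len(golden)


golden = {"img_001": ("car", (10, 10, 110, 60)), "img_002": ("bus", (0, 0, 200, 100))}
submitted = {"img_001": ("car", (12, 8, 108, 62)), "img_002": ("car", (0, 0, 200, 100))}
print(score_against_golden(submitted, golden))  # → 0.5 (img_002 has the wrong class)
```

Because the golden answers already exist, a score like this can be returned to the annotator immediately, without waiting for a manager's review.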

On the downside, creating golden datasets for training requires a significant amount of time and effort. You have to ensure that there is a sufficient number and variety of golden tasks to be representative of the actual data.

Moreover, the guidelines should state whether annotators may discuss annotations with one another, and whether they have access to golden datasets. If annotation consensus stages (available on V7!) are used for quality assurance, the guidelines must state that annotators cannot discuss their work items with one another, and that they should not ask annotation project managers for guidance beyond the written guidelines or the information provided beforehand.
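Consensus is only a useful quality signal if each annotator's vote is independent, which is why that rule matters. The sketch below is a hypothetical illustration, not a description of how V7's consensus stage works. It takes the class labels several annotators assigned to the same item, picks the majority label, and flags items whose agreement falls below an assumed threshold of 2/3.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple


def consensus_label(votes: List[str], min_agreement: float = 2 / 3) -> Tuple[Optional[str], float]:
    """Return (majority label, agreement ratio); the label is None if agreement is too low."""
    if not votes:
        return None, 0.0
    label, count = Counter(votes).most_common(1)[0]
    agreement = count / len(votes)
    return (label if agreement >= min_agreement else None), agreement


def items_for_review(all_votes: Dict[str, List[str]], min_agreement: float = 2 / 3) -> List[str]:
    """IDs of items where independent annotators did not reach consensus."""
    return [
        item_id
        for item_id, votes in all_votes.items()
        if consensus_label(votes, min_agreement)[0] is None
    ]


votes = {
    "img_001": ["car", "car", "car"],
    "img_002": ["car", "truck", "car"],
    "img_003": ["car", "truck", "bus"],
}
print(items_for_review(votes))  # → ['img_003']
```

If annotators had compared notes before labeling, `img_003` might show artificial agreement and slip through, hiding exactly the ambiguity the guidelines need to address.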

5. Offer regular feedback during the annotation process

It's advisable to iterate at the start of the labeling project and provide written feedback to the workforce as soon as possible. That way, you will not only have actual labeling instructions, but also an FAQ document for any new joiners. This also helps avoid systematic errors during labeling and makes it easier to monitor results from the very beginning of the annotation project.

It’s important to explain why the annotation is either correct or incorrect. This will allow your annotators to learn quickly and become more efficient.

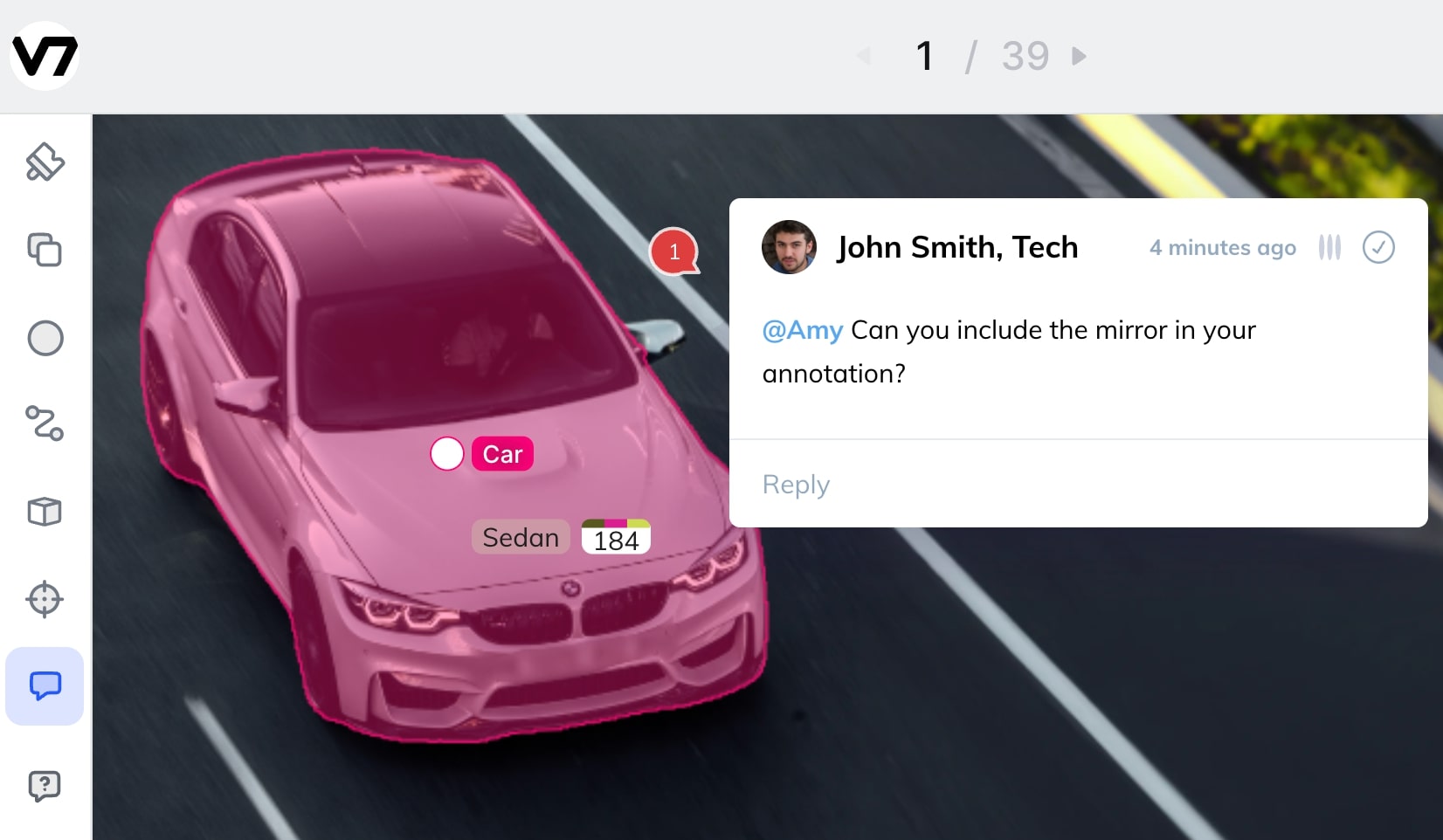
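Feedback is easier to act on when the reason for each rejection is recorded. As an illustrative sketch (the `Review` record and `systematic_errors` helper are hypothetical, not part of any annotation tool), grouping rejections by class and reason surfaces recurring mistakes, which are good candidates for a new FAQ entry or a clarified guideline.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Review:
    item_id: str
    label: str
    accepted: bool
    reason: str = ""  # why the annotation was rejected, written for the annotator


def systematic_errors(reviews: List[Review], min_count: int = 2) -> List[Tuple[Tuple[str, str], int]]:
    """(class, reason) pairs among rejections that recur at least min_count times, most frequent first."""
    counts = Counter((r.label, r.reason) for r in reviews if not r.accepted)
    return [(key, n) for key, n in counts.most_common() if n >= min_count]


reviews = [
    Review("img_001", "car", False, "partially visible car not labeled"),
    Review("img_002", "car", False, "partially visible car not labeled"),
    Review("img_003", "pedestrian", False, "occluded person not labeled"),
    Review("img_004", "car", True),
]
for (label, reason), n in systematic_errors(reviews):
    print(f"{label}: {reason} ({n}x)")  # → car: partially visible car not labeled (2x)
```

The `min_count` cutoff is an assumption; on a small pilot batch, even a single repeated reason may be worth an FAQ entry.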

In addition to providing feedback, annotation workforce managers should have an open line of communication. Annotators should be encouraged to ask questions, voice their concerns and make suggestions. This will help you build a better relationship with your workforce and also ensure that the annotation project is successful.

Conclusion

Writing annotation instructions is a critical step in the data labeling process and should not be overlooked. Following best practices such as addressing domain-specific knowledge gaps, providing examples, and offering regular feedback during the annotation process can help ensure high-quality training data.

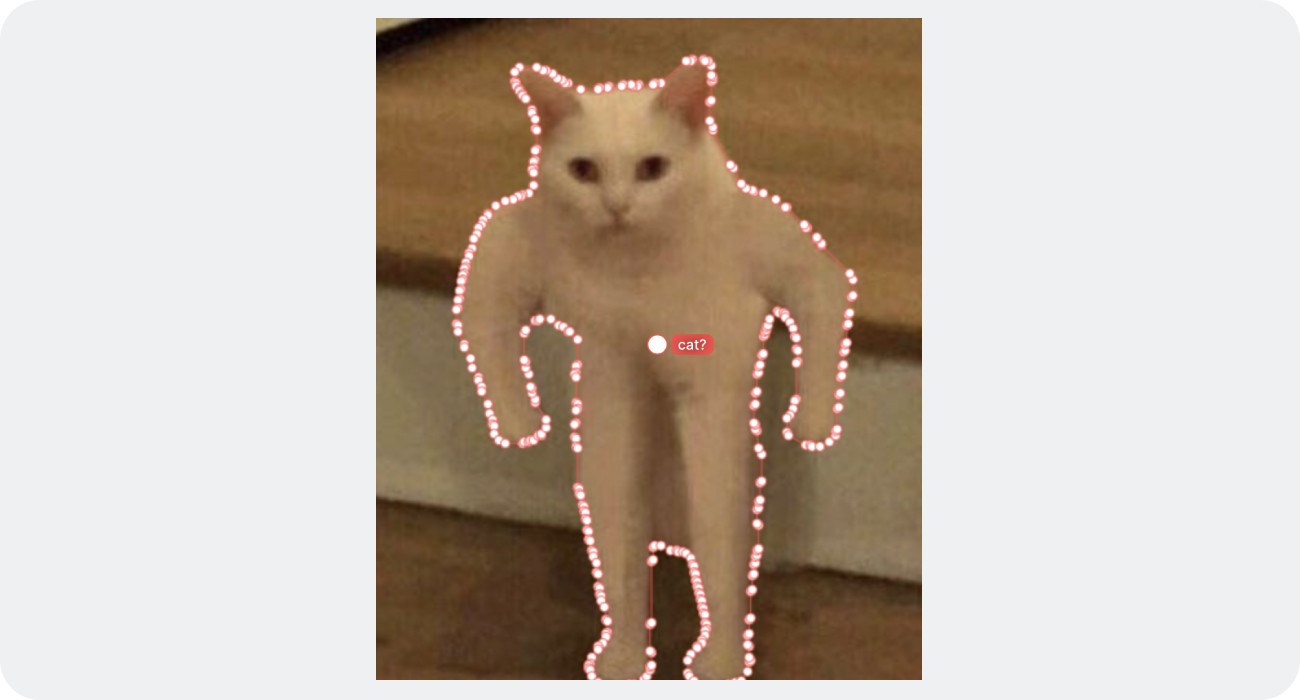

After all, you don’t want to accidentally end up with a dataset that looks like this:

By taking the time to create robust annotation instructions, machine learning teams can save themselves time and money in the long run.